Sometimes, in a hurry, you might do something stupid, such as running the wrong rm command and deleting /var/lib/dpkg on your Debian / Ubuntu system, either by pointing rm at the wrong directory or by mistyping rm -r /var/lib/dpkg.

I know this is pretty dumb, but we all have our dumb moments. If it happens to you and you then try the usual

root@debian:/ # apt update && apt upgrade

or try to install any package afterwards, you will end up with this error message:

E: Could not open lock file /var/lib/dpkg/lock - open (2: No such file or directory)

This error completely blocks any further package management, whether with apt / aptitude / apt-get or with the dpkg package management tool itself.

1. The /var/backups recovery directory

Thankfully, by God's mercy, the Debian developers foresaw that such accidents might happen and built in the automatic, periodic backup of some important files to the /var/backups/ directory.

So the next step is to check which backups are available there:

root@debian:/ # ls -al /var/backups/

total 19892

drwxr-xr-x 7 root root 4096 Sep 24 06:25 ./

drwxr-xr-x 22 root root 4096 Dec 21 2020 ../

-rw-r--r-- 1 root root 245760 Aug 20 06:25 alternatives.tar.0

-rw-r--r-- 1 root root 15910 Aug 14 06:25 alternatives.tar.1.gz

-rw-r--r-- 1 root root 15914 May 29 06:25 alternatives.tar.2.gz

-rw-r--r-- 1 root root 15783 Jan 29 2021 alternatives.tar.3.gz

-rw-r--r-- 1 root root 15825 Nov 20 2020 alternatives.tar.4.gz

-rw-r--r-- 1 root root 15778 Jul 16 2020 alternatives.tar.5.gz

-rw-r--r-- 1 root root 15799 Jul 4 2020 alternatives.tar.6.gz

-rw-r--r-- 1 root root 80417 Aug 19 14:48 apt.extended_states.0

-rw-r--r-- 1 root root 8693 Apr 27 22:40 apt.extended_states.1.gz

-rw-r--r-- 1 root root 8658 Apr 17 19:45 apt.extended_states.2.gz

-rw-r--r-- 1 root root 8601 Apr 15 00:52 apt.extended_states.3.gz

-rw-r--r-- 1 root root 8599 Apr 9 00:26 apt.extended_states.4.gz

-rw-r--r-- 1 root root 8542 Mar 18 2021 apt.extended_states.5.gz

-rw-r--r-- 1 root root 8549 Mar 18 2021 apt.extended_states.6.gz

-rw-r--r-- 1 root root 9030483 Jul 4 2020 aptitude.pkgstates.0

-rw-r--r-- 1 root root 628958 May 7 2019 aptitude.pkgstates.1.gz

-rw-r--r-- 1 root root 534758 Oct 21 2017 aptitude.pkgstates.2.gz

-rw-r--r-- 1 root root 503877 Oct 19 2017 aptitude.pkgstates.3.gz

-rw-r--r-- 1 root root 423277 Oct 15 2017 aptitude.pkgstates.4.gz

-rw-r--r-- 1 root root 420899 Oct 14 2017 aptitude.pkgstates.5.gz

-rw-r--r-- 1 root root 229508 May 5 2015 aptitude.pkgstates.6.gz

-rw-r--r-- 1 root root 11 Oct 14 2017 dpkg.arch.0

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.1.gz

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.2.gz

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.3.gz

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.4.gz

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.5.gz

-rw-r--r-- 1 root root 43 Oct 14 2017 dpkg.arch.6.gz

-rw-r--r-- 1 root root 1319 Apr 27 22:28 dpkg.diversions.0

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.1.gz

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.2.gz

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.3.gz

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.4.gz

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.5.gz

-rw-r--r-- 1 root root 387 Apr 27 22:28 dpkg.diversions.6.gz

-rw-r--r-- 1 root root 375 Aug 23 2018 dpkg.statoverride.0

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.1.gz

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.2.gz

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.3.gz

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.4.gz

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.5.gz

-rw-r--r-- 1 root root 247 Aug 23 2018 dpkg.statoverride.6.gz

-rw-r--r-- 1 root root 3363749 Sep 23 14:32 dpkg.status.0

-rw-r--r-- 1 root root 763524 Aug 19 14:48 dpkg.status.1.gz

-rw-r--r-- 1 root root 760198 Aug 17 19:41 dpkg.status.2.gz

-rw-r--r-- 1 root root 760176 Aug 13 12:48 dpkg.status.3.gz

-rw-r--r-- 1 root root 760105 Jul 16 15:25 dpkg.status.4.gz

-rw-r--r-- 1 root root 759807 Jun 28 15:18 dpkg.status.5.gz

-rw-r--r-- 1 root root 759554 May 28 16:22 dpkg.status.6.gz

drwx------ 2 root root 4096 Oct 15 2017 ejabberd-2017-10-15T00:22:30.p1e5J8/

drwx------ 2 root root 4096 Oct 15 2017 ejabberd-2017-10-15T00:24:02.dAUgDs/

drwx------ 2 root root 4096 Oct 15 2017 ejabberd-2017-10-15T12:29:51.FX27WJ/

drwx------ 2 root root 4096 Oct 15 2017 ejabberd-2017-10-15T21:18:26.bPQWlW/

drwx------ 2 root root 4096 Jul 16 2019 ejabberd-2019-07-16T00:49:52.Gy3sus/

-rw------- 1 root root 2512 Oct 20 2020 group.bak

-rw------- 1 root shadow 1415 Oct 20 2020 gshadow.bak

-rw------- 1 root root 7395 May 11 22:56 passwd.bak

-rw------- 1 root shadow 7476 May 11 22:56 shadow.bak

In this situation, the files that matter most are /var/backups/dpkg.status*, since they let us restore the list of packages we had installed on the Debian system.

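These backups are rotated automatically: the .0 file is the most recent, uncompressed copy, and the numbered .gz files are progressively older generations, as the dates above show. Likewise, the dpkg.diversions*, dpkg.statoverride* and dpkg.arch* files are backups of the diversions, statoverride and arch files in /var/lib/dpkg.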
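To decide which generation to restore, it can help to see how many package stanzas each dpkg.status backup contains; a sudden drop between generations would hint that a backup was taken after something had already gone wrong. Below is a minimal sketch of that check, assuming a POSIX shell with gzip and grep; list_status_backups is a hypothetical helper name, and its directory argument defaults to /var/backups.

```shell
# Hypothetical helper: count the "Package:" stanzas in every dpkg status
# backup, newest first (.0 is uncompressed, the numbered ones are gzipped).
# The count includes every stanza, also removed-but-not-purged packages.
# The function body runs in a subshell, so its variables do not leak.
list_status_backups() (
    dir=${1:-/var/backups}
    for f in "$dir"/dpkg.status.0 "$dir"/dpkg.status.[0-9]*.gz; do
        [ -e "$f" ] || continue    # skip patterns that matched nothing
        case $f in
            *.gz) n=$(gzip -dc "$f" | grep -c '^Package:' || true) ;;
            *)    n=$(grep -c '^Package:' "$f" || true) ;;
        esac
        printf '%s\t%s packages\n' "${f##*/}" "$n"
    done
)
```

Run as root without arguments on the system above, it would print one line per file, from dpkg.status.0 down to dpkg.status.6.gz.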

For reference, below is the typical structure of /var/lib/dpkg on a healthy deb-based system:

hipo@debian:/home/hipo$ ls -l /var/lib/dpkg/

total 11504

drwxr-xr-x 2 root root 4096 Aug 19 14:33 alternatives/

-rw-r--r-- 1 root root 11 Oct 14 2017 arch

-rw-r--r-- 1 root root 2199402 Oct 19 2017 available

-rw-r--r-- 1 root root 2197483 Oct 19 2017 available-old

-rw-r--r-- 1 root root 8 Sep 6 2012 cmethopt

-rw-r--r-- 1 root root 1319 Apr 27 22:28 diversions

-rw-r--r-- 1 root root 1266 Nov 18 2020 diversions-old

drwxr-xr-x 2 root root 606208 Sep 23 14:32 info/

-rw-r----- 1 root root 0 Sep 23 14:32 lock

-rw-r----- 1 root root 0 Mar 18 2021 lock-frontend

drwxr-xr-x 2 root root 4096 Sep 17 2012 parts/

-rw-r--r-- 1 root root 375 Aug 23 2018 statoverride

-rw-r--r-- 1 root root 337 Aug 13 2018 statoverride-old

-rw-r--r-- 1 root root 3363749 Sep 23 14:32 status

-rw-r--r-- 1 root root 3363788 Sep 23 14:32 status-old

drwxr-xr-x 2 root root 4096 Aug 19 14:48 triggers/

drwxr-xr-x 2 root root 4096 Sep 23 14:32 updates/

2. Recreate the basic /var/lib/dpkg directory and file structure

As you can see, it contains 5 directories (alternatives, info, parts, triggers and updates), the status file and a few other files.

So the first step is to recreate the lost directory structure:

hipo@debian: ~$ sudo mkdir -p /var/lib/dpkg/{alternatives,info,parts,triggers,updates}

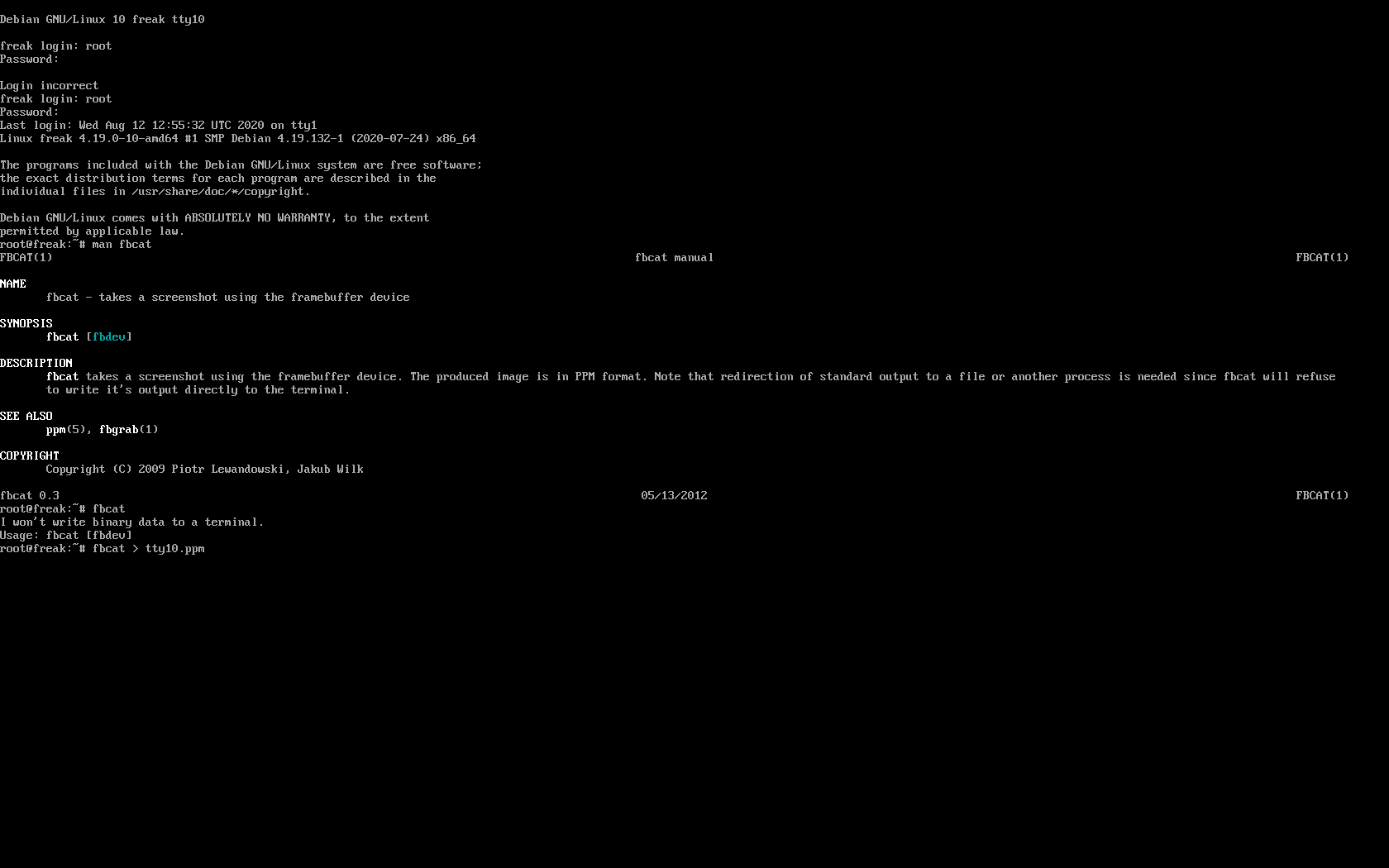
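The mkdir command above recreates only the directories. A slightly more defensive sketch follows: it takes the target directory as an argument, so it can be tried on a scratch directory first (recreate_dpkg_skeleton is a hypothetical name), and it also creates an empty available file, because some older dpkg releases complain when that file is missing.

```shell
# Hypothetical helper: recreate the skeleton of a dpkg admin directory.
# Usage: recreate_dpkg_skeleton [DIR]   (DIR defaults to /var/lib/dpkg)
recreate_dpkg_skeleton() (
    dir=${1:-/var/lib/dpkg}
    for sub in alternatives info parts triggers updates; do
        mkdir -p "$dir/$sub" || exit 1
        chmod 755 "$dir/$sub"    # drwxr-xr-x, as in the healthy listing
    done
    chmod 755 "$dir"
    # Some older dpkg releases complain when 'available' does not exist,
    # so an empty placeholder is a cheap precaution. An existing file is
    # left untouched.
    if [ ! -e "$dir/available" ]; then
        : > "$dir/available"
        chmod 644 "$dir/available"
    fi
)
```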

3. Recover /var/lib/dpkg/status file

Next, recover the dpkg status file from the most recent backup:

hipo@debian: ~$ sudo cp /var/backups/dpkg.status.0 /var/lib/dpkg/status
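The same backups also cover the diversions, statoverride and arch files, which were wiped along with the rest of /var/lib/dpkg. A minimal sketch that restores all four from the newest generation, falling back to the gzipped .1 copy when the .0 file is absent, could look like this; restore_from_backups is a hypothetical helper, and both directories are parameters defaulting to /var/backups and /var/lib/dpkg.

```shell
# Hypothetical helper: copy dpkg's status, diversions, statoverride and
# arch files back from the newest backup generation. Usage:
#   restore_from_backups [BACKUP_DIR] [DPKG_DIR]
restore_from_backups() (
    bdir=${1:-/var/backups}
    ddir=${2:-/var/lib/dpkg}
    for name in status diversions statoverride arch; do
        if [ -f "$bdir/dpkg.$name.0" ]; then
            cp "$bdir/dpkg.$name.0" "$ddir/$name" || exit 1
        elif [ -f "$bdir/dpkg.$name.1.gz" ]; then
            gzip -dc "$bdir/dpkg.$name.1.gz" > "$ddir/$name" || exit 1
        elif [ "$name" = status ]; then
            # Without a status file dpkg cannot work at all: give up.
            echo "no dpkg.status backup found in $bdir" >&2
            exit 1
        else
            # The other files may legitimately have no backup at all.
            echo "no backup of $name in $bdir, skipping" >&2
            continue
        fi
        chmod 644 "$ddir/$name"    # -rw-r--r--, as in the healthy listing
        echo "restored $ddir/$name"
    done
)
```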

4. Check that dpkg package installation works again and reinstall base-files

Next, check whether dpkg, the Debian package manager, works again by downloading the dpkg .deb package and reinstalling it:

root@debian:/root # apt-get download dpkg

root@debian:/root # sudo dpkg -i dpkg*.deb

If you get no errors, the next step is to reinstall base-files, the essential package that provides the basic directory layout and core system files such as /etc/debian_version:

root@debian:/root # apt-get download base-files

…

root@debian:/root # sudo dpkg -i base-files*.deb

…
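Downloading into the current directory means the dpkg*.deb glob can also match unrelated packages such as dpkg-dev if they happen to be lying around. Below is a sketch that works in a throwaway directory instead. reinstall_dpkg_core is a hypothetical name, and APT_GET and DPKG are hypothetical environment overrides (handy for a dry run with stub commands); by default it calls the real apt-get and dpkg, which requires root.

```shell
# Hypothetical helper: download and reinstall dpkg and base-files inside a
# temporary directory, so the globs below only see the freshly fetched files.
# APT_GET and DPKG are hypothetical overrides for a dry run; by default the
# real apt-get and dpkg are used.
reinstall_dpkg_core() (
    apt_get=${APT_GET:-apt-get}
    dpkg_cmd=${DPKG:-dpkg}
    work=$(mktemp -d) || exit 1
    cd "$work" || exit 1
    rc=0
    if "$apt_get" download dpkg base-files; then
        # Same order as the manual steps: dpkg first, then base-files.
        "$dpkg_cmd" -i dpkg_*.deb && "$dpkg_cmd" -i base-files_*.deb || rc=$?
    else
        rc=1
    fi
    cd / && rm -rf "$work"    # clean up the scratch directory either way
    exit "$rc"
)
```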

5. Update the package lists and check database consistency

Then update the system package lists and check the consistency of the dpkg / apt database:

root@debian:/root # dpkg --audit

…

root@debian:/root # sudo apt-get update

…

root@debian:/root # sudo apt-get check

…

As a result, more of the files in /var/lib/dpkg should reappear. List the directory again and compare it with the listing shown earlier; the two should look similar.

root@debian:/root # ls -l /var/lib/dpkg

…
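Instead of eyeballing the two listings, the comparison can be scripted. The sketch below (check_dpkg_dir is a hypothetical name) looks for the directories and main files from the healthy listing shown earlier and prints whatever is missing. Keep in mind that files such as arch, available, diversions or statoverride only exist once something has written them, so a "missing" line for those is a hint rather than an error; the function only fails when status itself is absent.

```shell
# Hypothetical helper: compare a dpkg admin directory with the entries of
# the healthy listing shown earlier and print the ones that are missing.
# Returns non-zero only if the status file itself is absent.
check_dpkg_dir() (
    dir=${1:-/var/lib/dpkg}
    for entry in alternatives/ info/ parts/ triggers/ updates/ \
                 arch available diversions statoverride status; do
        case $entry in
            */) [ -d "$dir/$entry" ] && continue ;;   # directories
            *)  [ -f "$dir/$entry" ] && continue ;;   # regular files
        esac
        echo "missing: $entry"
    done
    # dpkg cannot work at all without the status file.
    [ -f "$dir/status" ]
)
```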

6. Reinstall dpkg completely from source code if nothing else works

If some files are still missing, they should get recreated during normal day-to-day package management, so no worries.
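One kind of missing file is worth singling out: the per-package file lists in /var/lib/dpkg/info (name.list) are only rewritten when a package is (re)installed or upgraded, so until then dpkg no longer knows which files belong to which package. Below is a rough sketch that lists installed packages lacking such a file by reading the restored status file with awk; packages_without_lists is a hypothetical helper, and it ignores architecture qualifiers, so treat its output as a starting point.

```shell
# Hypothetical helper: print installed packages that have no file list in
# DPKG_DIR/info. It reads the status file with awk instead of dpkg-query,
# so it also works on a copy. Architecture qualifiers are not checked.
packages_without_lists() (
    dir=${1:-/var/lib/dpkg}
    awk '
        /^Package: / { pkg = $2 }
        # Only lines ending in " installed", i.e. fully installed packages.
        /^Status: .* installed$/ { print pkg }
    ' "$dir/status" |
    while read -r pkg; do
        found=no
        # "Multi-Arch: same" packages use name:arch.list instead of name.list.
        for f in "$dir/info/$pkg.list" "$dir/info/$pkg":*.list; do
            if [ -e "$f" ]; then found=yes; fi
        done
        if [ "$found" = no ]; then echo "$pkg"; fi
    done
)
# Possible follow-up as root (versions no longer in the archive will fail):
# packages_without_lists | xargs -r apt-get install --reinstall -y
```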

If, however, you get a nasty error like this after trying to upgrade the system or install a package with apt:

'/usr/local/var/lib/dpkg/status' for reading: No such file or directory

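Then the next and final thing to try is to download, compile and reinstall dpkg from source code! The commands below fetch an old dpkg 1.16 tarball from Launchpad; ideally, use the dpkg source version that matches your release.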

root@debian:/ # wget https://launchpad.net/ubuntu/+archive/primary/+files/dpkg_1.16.1.2ubuntu7.2.tar.bz2

root@debian:/ # tar -xvf dpkg_1.16*

…

root@debian:/ # cd dpkg-1.16*

…

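Run configure with the standard Debian paths; with the default /usr/local prefix, the freshly built dpkg would look for its database under /usr/local/var/lib/dpkg instead of /var/lib/dpkg, which leads to exactly the kind of error shown above:

root@debian:/ # ./configure --prefix=/usr --sysconfdir=/etc --localstatedir=/var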

…

root@debian:/ # make

…

root@debian:/ # make install

…

Hopefully you already have gcc and the other development tools provided by the build-essential .deb package; otherwise you will have to download and compile those as well 🙂

If even this doesn't bring back the packages you had installed before (hopefully it won't come to that), waste no more time: back up the most important data on the server and reinstall it completely.

The moral of this incident: always have a good backup system in place and regularly back up /var/lib/dpkg, /etc/, /usr/local* and other important files to a remote backup server, so you can easily recover if you accidentally do something whacky.
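As a starting point for that habit, here is a minimal sketch that packs /var/lib/dpkg, /etc and /usr/local into a dated tarball. backup_system_state is a hypothetical name, the destination directory is an argument, and copying the archive to the remote server (scp, rsync or whatever your backup setup uses) is left as a commented example with a made-up host name.

```shell
# Hypothetical helper: pack the given paths (by default the ones suggested
# above) into a dated tarball inside DEST_DIR and print the archive path.
backup_system_state() (
    dest=${1:?usage: backup_system_state DEST_DIR [PATH...]}
    shift
    [ "$#" -gt 0 ] || set -- /var/lib/dpkg /etc /usr/local
    mkdir -p "$dest" || exit 1
    archive="$dest/system-state-$(date +%Y%m%d-%H%M%S).tar.gz"
    # GNU tar drops the leading "/" from member names and notes it on stderr.
    tar -czf "$archive" "$@" || exit 1
    echo "$archive"
)
# Shipping it off the machine, e.g. with scp (made-up host name):
# scp "$(backup_system_state /root/backups)" backup.example.com:/srv/backups/
```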

Hope this helps someone. Cheers 🙂