Posts Tagged ‘someone’

Wednesday, May 2nd, 2012

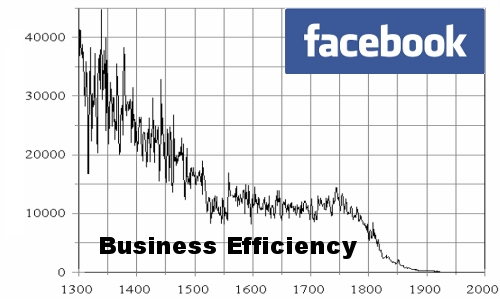

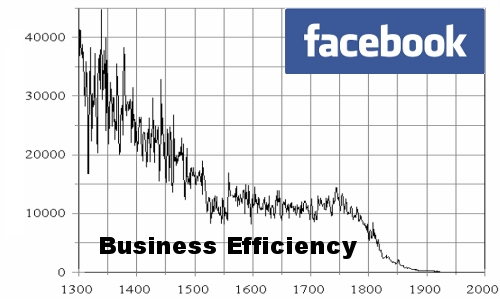

I don't know whether anyone has thought about this topic, but in my view Facebook use in organizations has a negative influence on companies' overall efficiency!

Think about it for a while: Facebook's website is one of the largest Internet-based "time stealing machines", so to say. I mean, most people use Facebook for pretty much useless stuff on a daily basis (don't they?). The whole original idea of Facebook was to be a hangout site for college people with a lot of time to spend on nothing.

Yes, it is true that some companies use Facebook successfully for advertising and for spreading awareness of a company brand or product name, but it is also true that many people in company administration jobs, like secretaries, accountants and probably even CEOs, lose a great deal of time on useless Facebook games, picture viewing etcetera.

Even people in government administration positions who have Internet access often open Facebook from their workplace. Not to mention that, with today's mobility, Facebook doesn't even need to be accessed from a desktop PC. Many company employees who do not have to work in front of a computer screen already have modern mobile "smart phones", as the business people incorrectly call these mini computer devices, which allow them to browse the NET, including Facebook.

Sadly, Microsoft (.NET) programmers and many programmers on various other platforms, as well as software beta testers and sys admins, are starting to adopt this "lose your time for nothing on Facebook" culture. Many of my friends actively use Facebook, (probably) because they feel lonely in front of the computer screen and want to have interaction with someone.

Anyways, the effect of this constant use of fb and similar social networks is clear. If the company's personnel have to work on a computer or on any Internet-connected device, a big part of the time on the device is being 'invested' in Facebook to kill some time, instead of being invested in innovation within the company or in doing the assigned tasks in the best possible way.

Even those who use Facebook only occasionally from their workplace (by occasionally I mean when they don't have any work to do) are constantly distracted (their focus on work is stolen) by the browser window left open, and when it comes to doing some kind of work, their efficiency drops severely.

You might wonder how I know that an open Facebook browser tab interacts badly with the rest of an employee's work. Well, let me explain. It is well known that the human mind is not designed to do multiple tasks simultaneously (we're not computers, though even computers don't work perfectly when simultaneous tasks are at hand).

Therefore, by using Facebook in parallel with their daily job, most people nowadays try to "multitask", and this results in poor productivity per employee. The chain result of the worsened productivity per employee is seen at the end of the fiscal quarter or fiscal year as bad productivity levels, worsened product quality and hence poor financial results.

I once worked for a company whose CEO had realized that the use of certain Internet resources like Facebook, Gmail and Yahoo Mail hurts employee productivity, and therefore the executive directors asked me to filter out facebook, GMAIL and mail.yahoo as well as a few other websites which consumed a big portion of the employees' time …

Well, apparently this CEO was smart and realized the harm these Internet-based resources did to his business. Nowadays, however, many company executives do not realize the bad effect that heavy use of public Internet services has on their workforce and never ask the system administrator to filter out these "employee efficiency thieves".

I hope this article will eventually be read by some small or middle-sized company with deteriorating efficiency, and that it will motivate some companies to introduce a policy against Facebook and Gmail use to boost company performance.

As one can imagine, if you sum up all the harm Facebook does to companies around the world simply by tempting employees to do facebooking instead of their work, it definitely worsens the already severe economic crisis raging around …

The topic of how Facebook use destroys many businesses is quite huge, and I'm probably missing a lot of the harmful effects that just a simple "innocent Facebook use" can have on a business, so I will be glad to hear from people in the comments whether anyone benefits at all from Facebook use in a company office (I seriously doubt there is even one).

Suppose you are a company that does a big portion of its work behind a computer screen over the Internet, via a Software as a Service offering, and suppose you have a project deadline to meet. The deadline is way more likely to be met if you filter out Facebook.

Disabling employees' access to Facebook and adding a company policy that prohibits social network use and other time-consuming Internet spaces should produce good productivity results for the company quite easily.

Though the employees can still find a way to access their favourite off-the-job Internet services, it will be way harder.

If the employees' work is monitored by installed cameras, not many people will want to cheat and use Facebook, Gmail or any other service prohibited by the company's internal code.

Though these are draconian measures, my personal view is that it's better for a company to have such a policy than to pay its employees to browse Facebook….

I'm not aware of what the situation is within many companies nowadays, or how many of them prohibit fb, hyves, google plus and the other kinds of "anti-social" networks.

But I truly hope more and more organizations' chairmen and company managers will comprehend the damage Facebook does to their business and will issue a new policy to prohibit the use of Facebook and other similarly shitty services.

In the meantime, for those running an organization that routes its traffic through a GNU / Linux powered router and who'd like to prohibit Facebook use to increase employee efficiency, use these few lines of shell code + iptables:

#!/bin/sh

# Simple iptables firewall rules to filter out www.facebook.com

# Leaving www.facebook.com open from your office will have impact on employees output ;)

# Written by hip0

# 05.03.2012

get_fb_network=$(whois 69.63.190.18|grep CIDR|awk '{ print $2 }');

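# Drop traffic that originates on the router itself

/sbin/iptables -A OUTPUT -p tcp -d "${get_fb_network}" -j DROP

# Drop traffic forwarded for the office machines behind the router

/sbin/iptables -A FORWARD -p tcp -d "${get_fb_network}" -j DROP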

Here is also the same tiny shell script that filters out (blocks access to) facebook.

If the script logic is followed, I guess Facebook can be disabled just as easily on other company networks where the router runs CISCO, BSD etc.
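The same logic can be sketched a bit more defensively. The snippet below is only a minimal sketch, not the script used above: it prints the iptables commands instead of running them, so they can be reviewed first. The function names extract_cidrs and print_block_rules are made up for this illustration, and it assumes that the whois output carries ARIN-style "CIDR:" lines and that the office traffic passes through the router's FORWARD chain.

```shell
#!/bin/sh
# Hypothetical helpers (not part of the original script).

# Read whois output on stdin and print one CIDR block per line.
# Assumes ARIN-style "CIDR: a.b.c.d/nn, e.f.g.h/mm" records.
extract_cidrs() {
    grep -i '^CIDR:' | sed -e 's/^[^:]*://' | tr ',' '\n' | tr -d ' \t'
}

# Read CIDR blocks on stdin and print (not run) one DROP rule per block.
print_block_rules() {
    while read -r net; do
        [ -n "$net" ] || continue
        echo "/sbin/iptables -A FORWARD -p tcp -d $net -j DROP"
    done
}

# Example (needs the whois client; only prints the rules for review):
#   whois 69.63.190.18 | extract_cidrs | print_block_rules
```

Printing the rules first is a deliberate choice: a wrong network range in a DROP rule can cut off far more than one website, so it is worth reading the generated lines before piping them to a shell.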

I will be happy to hear if someone has researched how much a company's efficiency increases when Facebook gets filtered out in the office. My guess is that efficiency will increase by at least 30% as a result of prohibiting just Facebook.

Please drop me a comment if you have an argument against or for my thesis.


Posted in Business Management, System Administration | 2 Comments »

Monday, April 30th, 2012

I'm used to making picture screenshots in the GNOME desktop environment. As I've said in my prior posts, I'm starting to return to my old habit of using console ttys for regular daily jobs in order to increase my work efficiency. Along that line of thought, I sometimes need to take a screenshot of what I'm seeing on my physical (TTY) consoles to be able to reuse it later. I did some experimenting and this is how this article was born.

In this post, I will briefly explain how to take a picture of a command running in a console or terminal in GNU / Linux.

Before proceeding to the core of the article, I will say a few words on ttys, as I believe they might be helpful to someone.

The abbreviation tty comes from the word TeleTYpewriter. Teletypewriters are older than computers, and from around the 1960s they were used as terminals for typing commands to a computer and reading its printed replies. The same abbreviation is also used for the text telephones that help people with impaired hearing to use a telephone through a typing interface.

In Unix / Linux / BSD, ttys are the physical consoles where one logs in (typing in a username and password). There are physical ttys and virtual ttys in today's *nixes. Today ttys are used everywhere in modern Unix or Unix-like operating systems, with or without graphical environments.

Various Linux distributions have a different number of physical consoles (TTYs), and this depends mostly on the philosophy of the distro's major contributors, developers or surrounding community.

Most modern Linux distributions have at least 5 to 7 physical ttys. Debian, for instance, had 7 physical consoles active by default as of the time of writing.

Adding 3 more ttys in Debian / Ubuntu Linux (on releases that still use SysV init) is done by adding the following lines to /etc/inittab:

7:23:respawn:/sbin/getty 38400 tty7

8:23:respawn:/sbin/getty 38400 tty8

9:23:respawn:/sbin/getty 38400 tty9

On some Linux distributions, like Fedora 9 and newer, new ttys can no longer be added via /etc/inittab, as the RedHat guys moved away from SysV init (to Upstart) for some weird reason, but I guess this is too broad an issue to discuss here ….

In graphical environments ttys are metaphorically called "virtual". For instance, in gnome-terminal or while connected to a remote SSH server, a common tty name would be /dev/pts/8 etc.

The tty command in Linux and the BSDs can be used to learn which tty one is operating in.

Here is the output of my tty command, issued on the 3rd TTY (ALT+F3) on my notebook:

noah:~# tty

/dev/tty3

The tty command output from the mlterm GUI terminal looks like this:

hipo@noah:~$ tty

/dev/pts/9
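The two kinds of output above can be told apart in a script. This is just a small sketch under the assumption that text consoles show up as /dev/ttyN and terminal emulators or SSH sessions as /dev/pts/N, as in the examples above; classify_tty is a name invented for this illustration.

```shell
#!/bin/sh
# Hypothetical helper: classify a device path as printed by the tty command.
classify_tty() {
    case "$1" in
        /dev/tty[0-9]*) echo "text console" ;;
        /dev/pts/*)     echo "pseudo-terminal" ;;
        *)              echo "unknown" ;;
    esac
}

# Example: classify_tty "$(tty)"
```

Such a check could be handy in a script that should only run from a real text console, for instance before trying to take a console screenshot.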

Now that I've mentioned a few basic things about ttys, I will proceed to explain how I managed to:

a) Take a screenshot of a plain text tty screen into a .txt file

b) Take a (picture) JPG / PNG screenshot of my Linux TTY console content

1. Take a screenshot of a plain text tty screen into a plain (ASCII) .txt file:

To take a screenshot of the tty1, tty2 and tty3 text consoles in plain text format, cat + a standard UNIX redirect is all that is necessary:

noah:~# cat /dev/vcs1 > /home/hipo/tty1_text_screenshot.txt

noah:~# cat /dev/vcs2 > /home/hipo/tty2_text_screenshot.txt

noah:~# cat /dev/vcs3 > /home/hipo/tty3_text_screenshot.txt

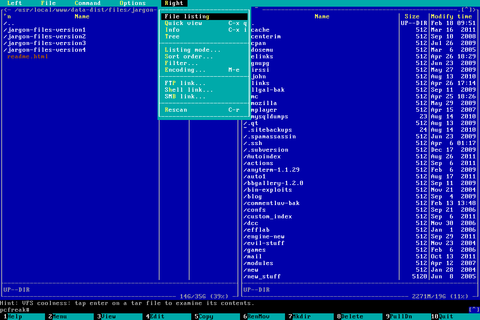

This will dump the text content of each console into the respective file. If, however, you try to dump an interactive text interface built with a library like ncurses, you will end up with an unreadable mess.

To read the produced text 'shots' afterwards, the less command can be used …

noah:~# less /home/hipo/tty1_text_screenshot.txt

noah:~# less /home/hipo/tty2_text_screenshot.txt

noah:~# less /home/hipo/tty3_text_screenshot.txt
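One caveat with the cat dumps: according to the vcs(4) manual page, the /dev/vcs* devices hold the screen characters without newline characters, so the dump is one long line. Here is a minimal sketch of restoring the screen rows with fold, assuming the console width is known (the default of 80 columns is only an assumption, and wrap_vcs_dump is a name invented for this example):

```shell
#!/bin/sh
# Hypothetical helper: re-wrap a raw /dev/vcsN dump into screen rows.
# $1 is the console width in columns (defaults to 80, an assumption).
wrap_vcs_dump() {
    fold -w "${1:-80}"
}

# Example (as root, on a real console):
#   wrap_vcs_dump 80 < /dev/vcs1 > /home/hipo/tty1_text_screenshot.txt
```

With the rows restored, the text file looks the same in any viewer, not only in a terminal that happens to be as wide as the console was.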

2. Take a picture (JPG / PNG) snapshot of Linux TTY console content

To take a screenshot of my notebook's tty consoles, I first had to install a "third party program", snapscreenshot. As of the time of writing this post, there is no deb / rpm package available for the 4 major desktop Linux distributions Ubuntu, Debian, Fedora and Slackware.

Hence, to install snapscreenshot, I had to manually download the latest source tarball and compile it, e.g.:

noah:~# cd /usr/local/src

noah:/usr/local/src# wget -q http://bisqwit.iki.fi/src/arch/snapscreenshot-1.0.14.3.tar.bz2

noah:/usr/local/src# tar -jxvvvf snapscreenshot-1.0.14.3.tar.bz2

…

noah:/usr/local/src# cd snapscreenshot-1.0.14.3

noah:/usr/local/src/snapscreenshot-1.0.14.3# ./configure && make && make install

Configuring…

Fine. Done. make.

make: Nothing to be done for `all'.

if [ ! "/usr/local/bin" = "" ]; then mkdir --parents /usr/local/bin 2>/dev/null; mkdir /usr/local/bin 2>/dev/null; \

for s in snapscreenshot ""; do if [ ! "$s" = "" ]; then \

install -c -s -o bin -g bin -m 755 "$s" /usr/local/bin/"$s";fi;\

done; \

fi; \

if [ ! "/usr/local/man" = "" ]; then mkdir --parents /usr/local/man 2>/dev/null; mkdir /usr/local/man 2>/dev/null; \

for s in snapscreenshot.1 ""; do if [ ! "$s" = "" ]; then \

install -m 644 "$s" /usr/local/man/man"`echo "$s"|sed 's/.*\.//'`"/"$s";fi;\

done; \

fi

By default, snapscreenshot takes the screenshot in the TGA image format. This format is readable by most picture viewing programs available today; however, it is not as common or as standardized for the web as JPEG and PNG.

Therefore, to get the text console snapshot in PNG or JPEG, one needs to use ImageMagick's convert tool. The convert example is also shown in the Example section of the snapscreenshot manual page.

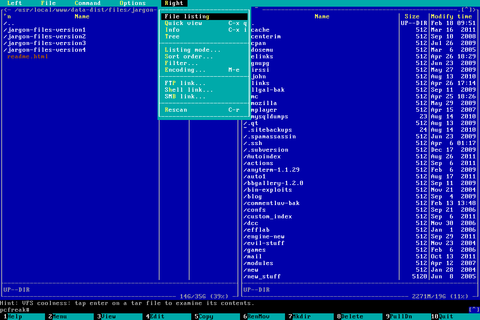

To take a .png screenshot of, let's say, the Midnight Commander interactive console file manager running on console tty1, I used the command:

noah:/home/hipo# snapscreenshot -c1 -x1 > ~/console-screenshot.tga && convert ~/console-screenshot.tga console-screenshot.png

Note that you need to have read/write permissions on /dev/vcs*, otherwise snapscreenshot will be unable to read the tty and will produce an error:

hipo@noah:~/Desktop$ snapscreenshot -c2 -x1 > snap.tga && convert snap.tga snap.png

Geometry will be: 1x2

Reading font…

/dev/console: Permission denied

To take a single picture screenshot of everything contained in all text consoles from tty1 to tty5, issue:

noah:/home/hipo# snapscreenshot -c5 -x1 > ~/console-screenshot.tga && convert ~/console-screenshot.tga console-screenshot.png

Here is a resized 480×320 pixels version of the original screenshot the command produces:

Storing a picture shot of the text (console) screen in JPEG (JPG) format is done analogously; just the output extension in the convert command has to be changed to jpeg, i.e.:

noah:/home/hipo# snapscreenshot -c5 -x1 > ~/console-screenshot.tga && convert ~/console-screenshot.tga console-screenshot.jpeg

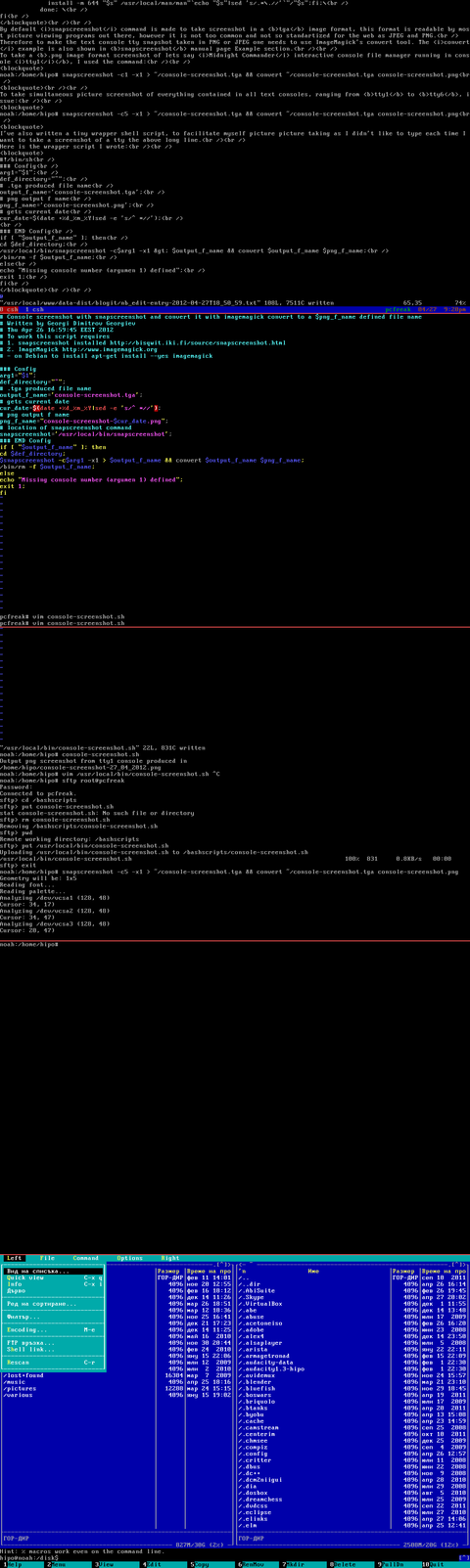

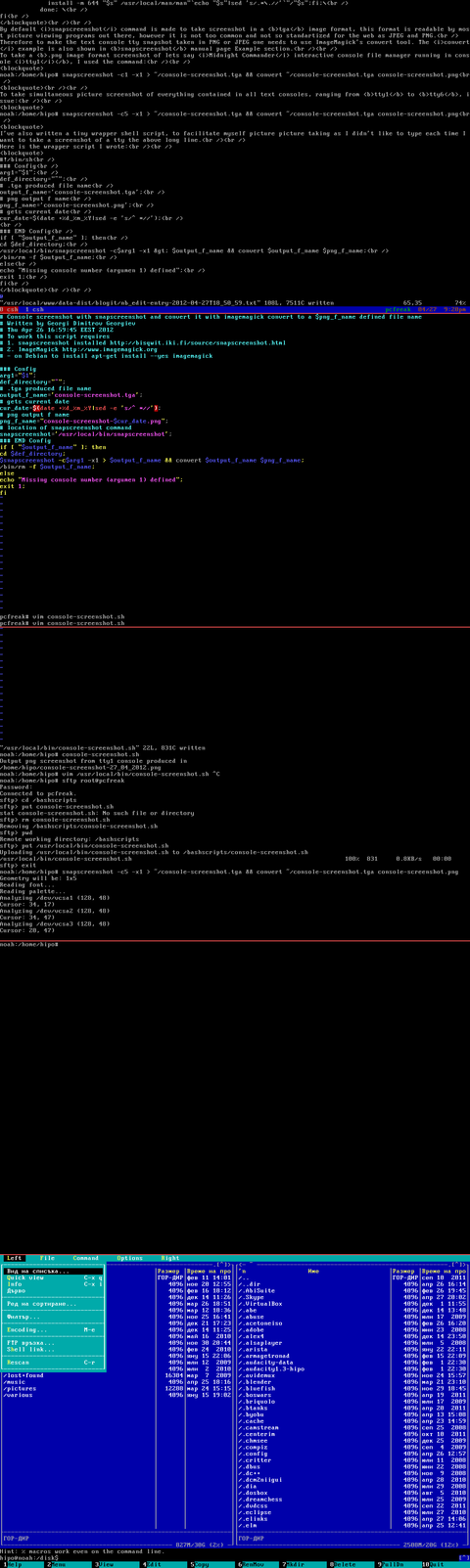

I've also written a tiny wrapper shell script to make picture taking easier for myself, as I didn't like typing the above long line each time I want to take a screenshot of a tty.

Here is the wrapper script I wrote:

#!/bin/sh

### Config

# .tga produced file name

output_f_name='console-screenshot.tga';

# gets current date

cur_date=$(date +%d_%m_%Y|sed -e 's/^ *//');

# png output f name

png_f_name="console-screenshot-$cur_date.png";

### END Config

# $1 is the first command line argument passed to the script

snapscreenshot -c"$1" -x1 > $output_f_name && convert $output_f_name $png_f_name;

echo "Output png screenshot from tty1 console produced in";

echo "$PWD/$png_f_name";

/bin/rm -f $output_f_name;

You can also download my console-screenshot.sh snapscreenshot wrapper script here

The script is quite simple to use; it takes just one argument, which is the number of the tty you would like to screenshot.
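Since the wrapper depends on that single argument, it may be worth checking it before calling snapscreenshot. The following is only a sketch of such a check, not part of the downloadable script; is_tty_number is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a positive whole number.
is_tty_number() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit
        *) [ "$1" -ge 1 ] ;;       # digits only: must be at least 1
    esac
}

# Possible use at the top of a wrapper script:
#   is_tty_number "$1" || { echo "usage: $0 <tty number>" >&2; exit 1; }
```

Failing early with a usage message is friendlier than letting the screenshot command complain about a missing or malformed option value.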

To use my script, download it to /usr/local/bin and set its executable flag:

noah:~# cd /usr/local/bin

noah:/usr/local/bin# wget -q https://www.pc-freak.net/~bshscr/console-screenshot.sh

noah:/usr/local/bin# chmod +x console-screenshot.sh

Afterwards, to use the script to snapshot the console terminal (tty1), type:

noah:~# console-screenshot.sh 1

I've also made a mirror of the latest version, snapscreenshot-1.0.14.3.tar.bz2, here, just in case this nice little program disappears from the net in the future.


Posted in Linux, System Administration | 3 Comments »

Monday, April 23rd, 2012

This post will be short, as I'm starting to think long posts are mostly nonsense. Have you people ever wondered about barcoding?

Stores all around the world now use barcoding. Barcode number regulations are orchestrated by certain bodies that we people have no control over. Barcoding makes us dependent on technology, as only technology can be used to read and store barcodes. It is technology that issues the barcodes. We have come to a point where we humans trust technology more than our fellow people. Trusting technology more than the people close to us is very dangerous. What if technology is not working as we expect it to?

What if there are hidden ways to control technology that we're not aware of?

Technology concepts are getting more and more crazy and abstract.

Think about virtualization for a while. Virtualization is being praised loudly these days and everyone is turning to it, thinking it is cheap and reliable. The facts I've seen and the little experience I've had with it were way less than convincing.

Who came up with this stupid idea? Oh yes, I remember, IBM came up with this insane idea some 40 years ago … We had sanity for a while, not massively adopting IBM's bulky virtualization ideas, and now people have gone crazy again, using a number of virtualization technologies.

If you think about it for a while, virtualization is one unreality (non-existence) of matter layered over another unreality. The programs that make computers "run" do not exist physically; they only exist in some electrical form. It's just a sort of electric field, if you think about it on a conceptual level …

As we nowadays trust technology with our whole lives, how do we know this technologically stored information is not altered by other fields? How can we be sure it always acts as we think it does and should? Was it tested for at least 40 years before adoption, as any new advancement should be?

Well, of course not! Everything new is just placed in our society without too much thinking. Someone gives the money for production, someone else buys it and installs it, and it's ready to go. Or at least that's how consumers think, and we have all become consumers. This is a big LIE we're constantly being convinced of!

It is not ready to work, it is not tested and we don't know what the consequence of it will be!

Technology and Genetically Modified Food are not so different, in that they both can produce unexpected results in our lives. And they're already bearing bad fruit, as you have surely seen.

You can see more and more people getting sick, more people going to the doctor, more people having to take medication daily to live a miserable, unhealthy, I wouldn't say life but "poor" existence …

Next time they tell you new technology is good for you and will make your life better, don't believe them! This is not necessarily true.

Though today's technology can do you good, in my view the harm seriously exceeds the good.


Posted in Everyday Life, News, Various | No Comments »

Friday, April 6th, 2012

I've been running WordPress for 3 years or so now, which is a very long time. I can hardly remember the first WordPress install, but it was something like WordPress 2.5 or WordPress 2.4.

For quite a long time my WordPress blog has been powered by a number of plugins, which I regularly update whenever new versions pop up …

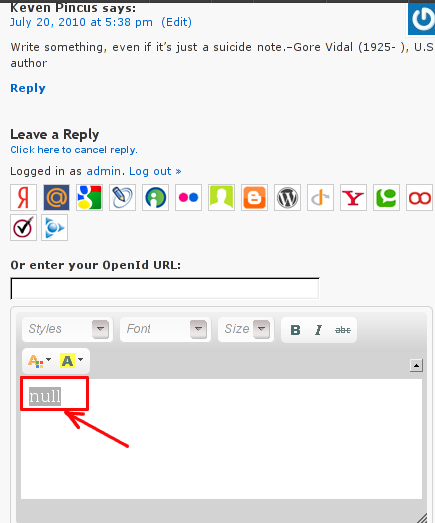

Most of the time I haven't noticed problems during major WordPress platform updates or updates of the installed extensions. However, today, while I tried to reply to one of my blog comments, I was shocked that I couldn't.

Pointing at the Comment Reply box and typing inside was impossible, and a null message stayed filled in the form:

To catch what was causing this weird misbehaviour of the comment reply functionality, I grepped through my /var/www/blog/wp-content/plugins/* for movecfm(null,0,1,null):

# cd /var/www/blog/wp-content/plugins

# grep -rli 'movecfm(null,0,1,null)' */*.php

wordpress-thread-comment/wp-thread-comment.php

I took the string movecfm(null,0,1,null) from the page source in my Firefox browser (by pressing Ctrl+U).
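The search above can be wrapped in a small reusable function. This is a hedged sketch rather than the exact command I ran: find_php_with_string is a made-up name, it searches all subdirectories instead of one level, and it uses grep -F so that the parentheses and commas in the string are always treated literally.

```shell
#!/bin/sh
# Hypothetical helper: list .php files under a directory that contain
# a literal string.
find_php_with_string() {
    # $1: literal string to look for, $2: directory to search
    find "$2" -type f -name '*.php' -exec grep -lF -- "$1" {} +
}

# Example:
#   find_php_with_string 'movecfm(null,0,1,null)' /var/www/blog/wp-content/plugins
```

Unlike the original command, this version is case-sensitive; add -i to the grep options if the case of the string is not known.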

Once I knew where the problem was, I first tried commenting out the occurrences of the null fields in wp-thread-comment.php, but as this caused other troubles and I was too lazy to read the whole code, I checked online whether some other fellows had experienced the same shitty null javascript error and whether someone had already pointed at a solution. In a few minutes of searching I was unable to find anyone who had reported this bug, but what I found were some user threads on wordpress.org mentioning that since WordPress 2.7 the wordpress-thread-comment plugin is obsolete, as the functionality it provides is included by default in newer WP installs.

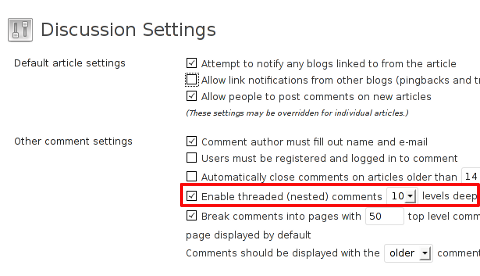

Hence, to enable WordPress's built-in threaded comments reply functionality, from within the wp-admin panel I used:

Settings -> Discussion -> Enable threaded (nested) comments (Tick)

You see there is also an option to define how many levels deep nested comments can go; the default was 5, but I thought 5 is a bit low, so I increased it to 10 levels.

Finally, to prevent the default threaded comments from interfering with the WordPress Thread Comment plugin, I disabled the plugin through the menus:

Plugins -> Active -> WordPress Thread Comments (Deactivate)

This solved the weird javascript null "bug" caused by wordpress-thread-comment once and for all.

Hopefully from now on my blog readers will not have issues with threaded reply comments.


Posted in Web and CMS, Wordpress | 1 Comment »

Thursday, April 5th, 2012

I've been planning to run my own domain WHOIS service for quite some time, and I always postponed it or forgot to do it.

If you wonder why I would need a (personal) web whois service: it is way easier to use and to remember for future reference if you run it on your own URL than wasting time searching for a whois service in google and then using someone else's service to get just simple DOMAIN WHOIS info.

So, back to my post topic: I postponed and postponed running my own web whois until yesterday, when I remembered my idea to have my own whois up and running and proceeded with it.

To achieve my goal I checked if there is free software or (open source) software that easily does this.

I know I could write one myself from scratch, but since it would have cost me at least a week of programming and testing, I didn't want to go this way.

To check if someone had already made an easy to install web whois service, I looked through the "ultimate source for free software", sourceforge.net.

Searching for the "whois web service" keywords displayed a few projects on top. Unfortunately, the sources of many of the projects were no longer available from http://sf.net or from the project developers' pages.

Thankfully, after a while I found a project called SpeedyWhois, whose PHP source was available for download.

With all that said about projects' missing sources, just in case the SpeedyWhois source disappears in the future (as probably happened with some of the other WHOIS web service projects), I've made a SpeedyWhois mirror for download here

Contrary to my expectation that installing the web whois service might be a "pain in the ass" (as is the case with so many free software php scripts and apps), the installation went quite smoothly.

To install it I took the following 4 steps:

1. Download the source (zip archive) with wget

# cd /var/www/whois-service;

/var/www/whois-service# wget -q https://www.pc-freak.net/files/speedywhois-0.1.4.zip

2. Unarchive it with unzip command

/var/www/whois-service# unzip speedywhois-0.1.4.zip

…

3. Set the proper DNS records

My nameservers are hosted at Godaddy, so I set my desired subdomain record from their domain name manager.

4. Edit Apache httpd.conf to create VirtualHost

This step is not mandatory, but I thought it would be nice to put the whois service under a subdomain, so I added a VirtualHost to my httpd.conf

The VirtualHost Apache directives I used are:

<VirtualHost *:80>

ServerAdmin hipo_aT_www.pc-freak.net

DocumentRoot /var/www/whois-service

ServerName whois.www.pc-freak.net

<Directory /var/www/whois-service>

AllowOverride All

Order Allow,Deny

Allow from All

</Directory>

</VirtualHost>

Afterwards, for the new webserver config to take effect, I restarted Apache:

# /usr/local/etc/rc.d/apache2 restart

Whenever I have some free time, maybe I will work on the code to try to add logging of previous whois requests and to post links to the previous lookups done via the web WHOIS service on the main whois page.

One thing that I disliked about how SpeedyWHOIS is written is that if no WHOIS information is returned for a domain request, e.g. if:

# whois domainname.com

returns empty output, the script doesn't warn with a message that there is no WHOIS data available for this domain or something similar.

This is not so important, as this kind of 'error' handling behaviour can easily be changed with minimal changes to the php code.
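The missing check is simple enough to illustrate. The sketch below is written in shell, not in PHP, and is not SpeedyWHOIS code; it only shows the idea of warning on an empty answer. whois_or_warn is a hypothetical name, and the optional second argument (the lookup command to call) exists only so the function can be tried without network access.

```shell
#!/bin/sh
# Hypothetical helper: run a whois lookup and warn if nothing came back.
# $1: domain name; $2 (optional): lookup command, defaults to whois.
whois_or_warn() {
    domain=$1
    lookup=${2:-whois}
    output=$("$lookup" "$domain" 2>/dev/null)
    if [ -z "$output" ]; then
        echo "No WHOIS data available for $domain"
        return 1
    fi
    printf '%s\n' "$output"
}

# Example: whois_or_warn domainname.com
```

The same three steps (capture the output, test whether it is empty, print a message instead) would carry over to the PHP code with little change.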

If you wonder why I need the web whois service, the answer is that it is way easier to use.

I don't have more time to research alternative open source web whois services further, so I would be glad to hear from anyone who has tested another web whois service that is free and comes under a FOSS license.

In the meantime, I'm sure that for people with small Internet websites like mine who are looking to run their OWN (personal) whois service, SpeedyWHOIS does a great job.


Posted in Everyday Life, System Administration, Web and CMS | 5 Comments »

Monday, March 12th, 2012

One of the WordPress websites hosted on our dedicated server constantly produces wp-cron.php 404 error messages like:

xxx.xxx.xxx.xxx - - [15/Apr/2010:06:32:12 -0600] "POST /wp-cron.php?doing_wp_cron HTTP/1.0" 404

Until recently I did not know what wp-cron.php does, so I checked in google and read a bit. Many of the places I read were a bit unclear and didn't give a good explanation of what exactly wp-cron.php does. I wrote this post in the hope that it will shed some more light on wp-cron.php and on how this major 404 issue is solved.

So what is wp-cron.php doing?

- wp-cron.php acts like a cron scheduler for WordPress.

- wp-cron.php is a WP file that controls routine actions for a particular WordPress install.

- It updates data in the SQL database on every request, every day, every hour etc. (depending on how it's set up).

- By default, wp-cron.php is executed automatically after EVERY PAGE LOAD!

- It checks all pending comments for spam with Akismet (if Akismet or a similar anti-spam plugin is installed).

- It sends all scheduled emails (e.g. an email to a commenter when someone replies to his comment, emails to newsletter subscribers etc.).

- It publishes scheduled articles at the set day and time.

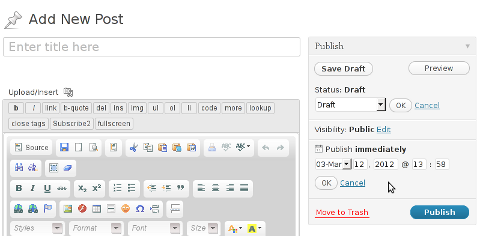

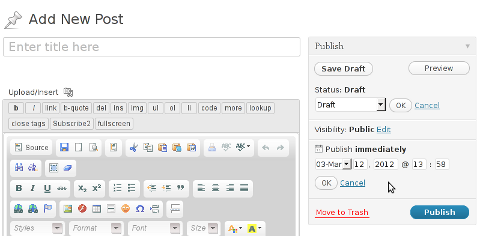

Suppose you're writing a new post and you want to take advantage of the WordPress functionality to schedule a post to appear online at a specific time:

The post scheduled through the Publish Immediately field is published at the set time thanks to the periodic invocation of wp-cron.php.

Another example of wp-cron.php at work is the flushing of old WP HTML caches generated by a WordPress caching plugin like W3 Total Cache.

wp-cron.php silently takes care of dozens of other things in the background. That's why many WordPress plugins depend heavily on proper periodic execution of wp-cron.php. Therefore, if something is wrong with wp-cron.php, a WordPress based blog or website works only partially or not at all.

Our company wp-cron.php errors case

In our case the:

212.235.185.131 - - [15/Apr/2010:06:32:12 -0600] "POST /wp-cron.php?doing_wp_cron HTTP/1.0" 404

occurs in the Apache access.log after each unique visitor request to WordPress, because wp-cron.php is invoked on each new site visitor request.

This puts a "vain load" on the Apache server, which constantly attempts to invoke the script … always returning a 404 not found error.
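To get a feeling for how much of this "vain load" there is, the failed requests can be counted. A minimal sketch, assuming log lines in the format shown above; count_wp_cron_404 is a hypothetical helper name and the log path in the example is only a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: count access.log lines (read from stdin) where a
# request for wp-cron.php was answered with status 404.
count_wp_cron_404() {
    # grep -c exits non-zero when the count is 0, so "|| true" keeps the
    # function's exit status clean while still printing the count.
    grep -c 'wp-cron\.php[^"]*" 404' || true
}

# Example: count_wp_cron_404 < /var/log/apache2/access.log
```

Comparing the count before and after a fix is a quick way to confirm that the errors have really stopped.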

As a consequence, the WP website experiences "weird" problems all the time. An illustration of a problem caused by improper wp-cron.php execution is what happens when we add new plugins to WP.

Let's say a new WordPress extension is downloaded, installed and enabled in order to add new useful functionality to the site.

Most of the time this new plugin would malfunction if, for example, it is meant to add some kind of new html form or to change something on some or all of the WordPress generated HTML pages.

These troubles are the result of wp-cron.php's inability to update settings in the WP SQL database after each new user request to our site.

So the newly added plugin's website functionality does not show up at all until the WP cache directory is manually deleted with rm -rf /var/www/blog/wp-content/cache/…

I don't know how this whole wp-cron.php mess occurred; however, my guess is that whoever installed this WordPress messed something up in the install procedure.

Anyways, as I researched thoroughly, I read about many people complaining of the same wp-cron.php 404 errors. From what I read, most people's troubles were caused by their shared hosting prohibiting wp-cron.php execution.

It appears many shared hosting providers choose to disable the default WordPress wp-cron.php execution. The reason is probably that the script puts a heavy load on shared hosting servers and causes server overloads.

Anyhow, since our company server is a dedicated server, I can tell for sure that in our case WordPress had no restrictions on how and when wp-cron.php is invoked.

I've also seen some posts online claiming that wp-cron.php issues are caused by improper localhost records in /etc/hosts. After a thorough examination I did not find any hosts problems:

hipo@debian:~$ grep -i 127.0.0.1 /etc/hosts

127.0.0.1 localhost.localdomain localhost

As you see from the above paste, our server's /etc/hosts has perfectly correct 127.0.0.1 records.

Changing default way wp-cron.php is executed

As I've learned, it is generally a good idea for WordPress based websites which get tens of thousands of visitors to alter the default way wp-cron.php is handled. Doing so gains some efficiency and improves server hardware utilization.

Invoking the script after each visitor request can put a heavy "useless" burden on the server CPU. On most WordPress based websites the script does not need to make frequent changes in the DB, as new comments on posts do not arrive often. In most WordPress installs out there, big changes to the site are not common.

Therefore, for WordPress blogs getting only a couple of user comments per hour, a good frequency for running wp-cron.php is a half-hourly cron job.

To disable the automatic invocation of wp-cron.php after each visitor request, open /var/www/blog/wp-config.php and somewhere around line 30 or 40 put:

define('DISABLE_WP_CRON', true);

An important note here is that the position in wp-config.php where define('DISABLE_WP_CRON', true); is placed matters. If, for instance, you put it at the end of the file or near the end, after the line that loads wp-settings.php, the setting will not take effect.

With that said, be sure to put the define somewhere among the initial defines of the file, or it will not work.
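To double check the placement without reading the whole file, something like the sketch below could be used. It is an illustration only: check_wp_cron_define is a hypothetical helper name, and it assumes the config file mentions wp-settings.php only on the line that loads it, as the stock wp-config.php does. A commented-out define would also be counted, so treat the result as a hint.

```shell
#!/bin/sh
# Sketch: report whether DISABLE_WP_CRON appears before wp-settings.php is loaded.
# check_wp_cron_define is a hypothetical helper, not part of WordPress.
check_wp_cron_define() {
    config="$1"
    # Line number of the first DISABLE_WP_CRON mention (empty if absent).
    define_line=$(grep -n "DISABLE_WP_CRON" "$config" | head -n 1 | cut -d: -f1)
    # Line number of the first wp-settings.php mention (empty if absent).
    settings_line=$(grep -n "wp-settings.php" "$config" | head -n 1 | cut -d: -f1)

    if [ -z "$define_line" ]; then
        echo "missing"
    elif [ -n "$settings_line" ] && [ "$define_line" -gt "$settings_line" ]; then
        echo "too late"
    else
        echo "ok"
    fi
}
```

Running check_wp_cron_define /var/www/blog/wp-config.php prints ok, too late or missing.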

Next, as the non-root user Apache runs under (www-data, httpd or www, depending on the Linux distribution or BSD Unix flavour), add a crontab line which invokes wp-cron.php with the PHP CLI every half an hour:

linux:~# crontab -u www-data -e

0,30 * * * * cd /var/www/blog; /usr/bin/php /var/www/blog/wp-cron.php >/dev/null 2>&1

To make sure the PHP CLI (Command Line Interface) interpreter is able to interpret wp-cron.php properly, check wp-cron.php for syntax errors with the command:

linux:~# php -l /var/www/blog/wp-cron.php

No syntax errors detected in /var/www/blog/wp-cron.php

That's all, 404 wp-cron.php error messages will not appear anymore in access.log! 🙂
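To confirm the 404s really stopped, the access log can be counted before and after the change. This is a rough sketch assuming the Apache combined log format, where the quoted request is followed by the status code; count_wp_cron_404 is a hypothetical helper name and the log path depends on your setup.

```shell
#!/bin/sh
# Sketch: count requests for wp-cron.php that were answered with 404.
# count_wp_cron_404 is a hypothetical helper; pass it the access log path.
count_wp_cron_404() {
    log="$1"
    # Matches e.g.: "POST /wp-cron.php?doing_wp_cron HTTP/1.0" 404 ...
    # [^"]* covers the query string and protocol up to the closing quote.
    grep -c 'wp-cron\.php[^"]*" 404 ' "$log"
}
```

If the number stops growing after the crontab change, the fix works.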

For those who manage to find the root cause of the /wp-cron.php?doing_wp_cron HTTP/1.0" 404 and fix the issue some other way (I'd be glad to know how), there is also another, external way to invoke wp-cron.php: a request sent directly to the webserver from a short cron job using wget or the lynx text browser.

– Here is how to call wp-cron.php every half an hour with lynx. Put something like this inside the crontab of any non-privileged user:

01,30 * * * * /usr/bin/lynx -dump "http://www.your-domain-url.com/wp-cron.php?doing_wp_cron" >/dev/null 2>&1

– Call wp-cron.php every 30 mins with wget:

01,30 * * * * /usr/bin/wget -q -O /dev/null "http://www.your-domain-url.com/wp-cron.php?doing_wp_cron"

Invoking wp-cron.php less frequently saves the server from processing wp-cron.php thousands of useless times.

The effect of altering the way wp-cron.php works should be visible immediately, as the server load should drop a bit.

Keep in mind you might need to play with the script execution frequency until you find the best fitting cron timing. In my company's case only up to 3 new articles are posted per week, hence too high a frequency of wp-cron.php invocations is useless.

For a blog where new posts appear once a day, a script schedule of every 6 to 12 hours should be OK.

Tags: akismet, Auto, caches, checks, commentor, cr, cron, daySuppose, dedicated server, doesn, dozens, Draft, email, error messages, execution, exlanation, file, google, HTML, HTTP, invocation, localhost, nbsp, newsletter, operation, periodic execution, php, plugin, quot, request, scheduler, someone, something, spam, SQL, time, time thanks, Wordpress, wordpress plugins, wp

Posted in System Administration, Web and CMS, Wordpress | 3 Comments »

Monday, July 9th, 2007

The day went faster than other, normal days. I woke up in the morning and went to church for the Liturgy. Then I went home and watched some Cartoon Network. Later I decided to go to my uncle to read him the Bible for some time, but he was not home, so I bought a watermelon from the local market for my grandmother. It’s nice to see somebody being happy about something :]. Later I went to my uncle. It’s sad to see someone in his condition :[. I really want him to get better. I read him from the Bible the Holy Gospel of Luke; I hope he has understood at least something from the Gospel text. Habib called home from London later, and this was a real joy. Habib is such a nice guy, I really want God to bless him in everything, for he deserves it. We spoke a lot about life in England: the bad conditions there, the low paid job, how hard his life there is, how we miss each other as friends, and about some close friends. After the conversation I decided to go out. I first went to Mino’s coffee. There were some people there, but I got angry at the nonsense conversations and decided to go to the Fountain; actually it was almost the same there. Later Toto, Mitko and I drank beer in the city park. And I went home … After the usual Evening Orthodox Prayers I will go to bed in 20 or 30 minutes.

Tags: Beer, bible, cartoon, cartoon network, close, close friends, coffee, conversation, conversations, end, Evangelic, Evening, god, habib, himfrom, home, job, life, life in england, liturgy, local market, london, luka, Mino, Mitko, mygrandmother, nice guy, orthodox prayers, someone, something, text, thecity, time, toto, watermelon

Posted in Everyday Life | No Comments »

Friday, April 11th, 2008

Yesterday and today we had Management Games and Theatre Games with Joop Vinke. In the management game we play a sort of Human Resources Management game. All the students are divided into groups and we play a simulator game in which we have to manage a company. First we set our 2 year goals and then we play the game in quarters (6 quarters). Every quarter we have to make some managerial decisions (invest money in different things, hire personnel, promote people etc.).

Basically the company consists of 660 employees. There are 5 levels in the company, from level 1, the unqualified specialists, up to level 5, the top management.

After we make our choices, all the data is fed into a computer which gives us feedback that helps us take the decisions for the next quarter. In the meantime Vinke organizes fun games to entertain us and make us feel comfortable with him, and through these games he tries to show us basic business concepts. I really enjoyed the last two days.

Today the game that impressed me the most was called “The Werewolves from Wackedan”. Basically it’s a strategy game with roles. In it a bunch of people play different roles: 3 of them are werewolves, others are citizens, and others are people with special abilities to foresee who the werewolves are.

We had cards in front of us, turned face down so that nobody but us could see them. Most of the cards are citizens and people who belong to the citizens; the other 3 are werewolves.

Every night the werewolves kill a person (by selecting somebody from the crowd while they sleep), because the werewolves are out at night when everybody sleeps. In the morning the citizens wake up to find one of their friends dead, so they try to take revenge by pointing at someone to be killed (it may be another citizen, it may be a werewolf).

At the end only werewolves or only citizens should survive 🙂 It was great fun to play this simple game today. At the end of the day, at 18:00, we had a session of the so-called Theatre Games. Theatre Games include different games which are designed to improve our communication skills and teach us to act like actors, plus they are pretty entertaining 🙂 That’s all; thanks to God everything in my life seems to run smoothly. Except my health: I’m still having some health issues. Although I can say there is an improvement, I am still not healed and I still drink herbs.

At 20:00 I was out with Narf and we went to the fountain; a little later Kimmo and Yavor joined us and we spent some time there. Well, that’s most of the day. At night I went to my grandma just to see how she is doing, and now I am writing this post. Tomorrow the Management Game continues at 09:00. So probably in a few minutes I’ll say the night prayers and then go to sleep.

Tags: big fun, choices, citizen, citizens, company, crowd, end, everybody, everything, feedback, fountain, fun, fun games, grandma, health, human resources management, Joop, management game, management games, managerial decisions, meantime, Narf, person, ppl, quarters, quot, revenge, session, simple game, sleep, someone, special abilities, strategic game, stuff, time, top management, werewolf, werewolves, year

Posted in Business Management, Everyday Life | 3 Comments »

Thursday, December 8th, 2011

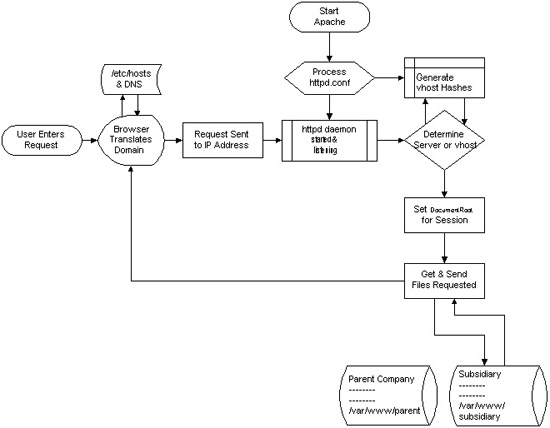

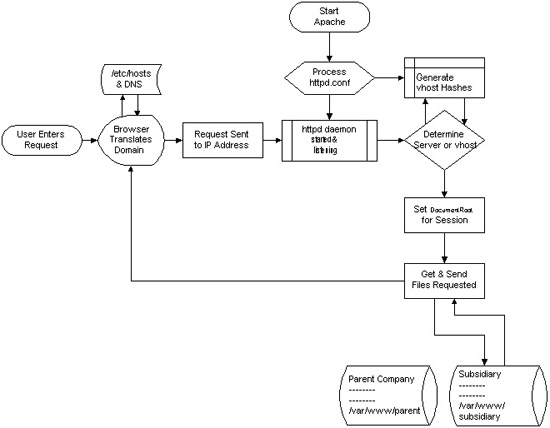

I decided to start this post with this picture, which I found in an onlamp.com article called “Simplify Your Life with Apache VirtualHosts”. I put it here because I think it illustrates the Apache webserver’s internal processes quite well. The picture also gives a good clue about when the Virtual Hosts get loaded. Anyway, I’ll get back to the main topic of this article, hoping the above picture gives some more insight into how Apache works.

Here is how to list all the enabled VirtualHosts Apache is serving pages for on Debian GNU / Linux:

server:~# /usr/sbin/apache2ctl -S

VirtualHost configuration:

wildcard NameVirtualHosts and _default_ servers:

*:* is a NameVirtualHost

default server exampleserver1.com (/etc/apache2/sites-enabled/000-default:2)

port * namevhost exampleserver2.com (/etc/apache2/sites-enabled/000-default

port * namevhost exampleserver3.com (/etc/apache2/sites-enabled/exampleserver3.com:1)

port * namevhost exampleserver4.com (/etc/apache2/sites-enabled/exampleserver4.com:1)

...

Syntax OK

The line *:* is a NameVirtualHost means the Apache VirtualHosts module will be able to use VirtualHosts listening on any IP address configured on the host, on any port the respective VirtualHost is configured to listen on.

The next output line:

port * namevhost exampleserver2.com (/etc/apache2/sites-enabled/000-default

shows that requests for the domain will be accepted by the webserver on any port (port *), and indicates the file in which the <VirtualHost> is defined. The number after the colon is the line number, e.g. /etc/apache2/sites-enabled/000-default:2 means the <VirtualHost> is defined on line 2 of that file.

To see all the enabled VirtualHosts on FreeBSD, the command to issue is:

freebsd# /usr/local/sbin/httpd -S

VirtualHost configuration:

wildcard NameVirtualHosts and _default_ servers:

*:80 is a NameVirtualHost

default server www.pc-freak.net (/usr/local/etc/apache2/httpd.conf:1218)

port 80 namevhost www.pc-freak.net (/usr/local/etc/apache2/httpd.conf:1218)

port 80 namevhost pcfreak.afraid.org (/usr/local/etc/apache2/httpd.conf:1353)

...

Syntax OK

On Fedora and the other Red Hat based Linux distributions there is no apache2ctl wrapper; there httpd -S (or apachectl -S) should display the enabled VirtualHosts.

One might wonder why someone would want to check which VirtualHosts are loaded by the Apache server this way, since the same can be checked by reviewing the Apache / Apache2 config file. Well, the main advantage is that checking the file directly can take more time, especially if the file contains thousands of similarly named virtual host domains.

Another case where the -S option is the better choice is when some VirtualHost enabled in a config file does not seem to be accessible. Checking directly whether Apache has properly loaded the VirtualHost directives rules out a problem with loading the VirtualHost. Yet another scenario is when there are multiple Apache config files / installs on the system and you’re unsure which one to check for the exact list of virtual domains loaded.
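With thousands of virtual hosts the raw -S output itself gets long, so it can help to boil it down to just the domain and the place where it is defined. The following is a small sketch, assuming the namevhost line layout shown in the outputs above; list_vhosts is a hypothetical helper name of mine, not an Apache tool.

```shell
#!/bin/sh
# Sketch: reduce `apache2ctl -S` / `httpd -S` output read from stdin to
# "domain<TAB>config-file:line" pairs. list_vhosts is a hypothetical helper.
list_vhosts() {
    # namevhost lines look like:
    #   port 80 namevhost example.com (/path/to/file.conf:12)
    # so field 4 is the domain and field 5 the location in parentheses.
    awk '$3 == "namevhost" { gsub(/[()]/, "", $5); print $4 "\t" $5 }'
}
```

It would be used as apache2ctl -S 2>&1 | list_vhosts; the 2>&1 is there because some Apache versions print the listing on stderr.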

Tags: apache, apache2, clue, com, config, configured, ctl, debian gnu, default port, default server, exampleserver, file, freak, freebsd, gnu linux, host, hosts, insight, ip address, life, Linux, lt, namevhost, NameVirtualHosts, onlamp, option, pcfreak, quot, reason, sbin, server pc, servers, someone, syntax, time, topic, Virtual, virtual hosts, Virtualhost, virtualhost configuration, VirtualHosts

Posted in Linux, System Administration | No Comments »

Saturday, March 3rd, 2012

I recently had to make a copy of the /usr/local/nginx directory under /usr/local/nginx-bak, in order to have a working copy of nginx in case my update of nginx to a new version from source messes something up.

I did not check the size of /usr/local/nginx, so I just ran the usual:

nginx:~# cp -rpf /usr/local/nginx /usr/local/nginx-bak

...

Execution took more than 20 seconds, so I checked the size and found out /usr/local/nginx/logs had grown to 120 gigabytes.
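A quick size check before the copy would have saved me the surprise. Below is a minimal sketch of such a check; largest_subdirs is a hypothetical helper name, and the limit of 5 entries is an arbitrary choice.

```shell
#!/bin/sh
# Sketch: print the biggest entries directly under a directory, largest first.
# largest_subdirs is a hypothetical helper, not a standard command.
largest_subdirs() {
    dir="$1"
    # du -sk prints one total in kilobytes per entry; sort -rn orders them
    # numerically in descending order; head keeps only the top 5.
    du -sk "$dir"/* 2>/dev/null | sort -rn | head -n 5
}
```

In my case largest_subdirs /usr/local/nginx would have listed /usr/local/nginx/logs on top.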

I didn't want to put extra load on the production server by copying over a hundred gigabytes of logs, so I asked myself whether excluding a directory is possible with the normal Linux copy (cp) command. I checked the cp manual (man cp), but there is no argument like --exclude or anything similar.

Even though an exclude feature is not implemented in the cp command, there are a couple of ways to copy a directory while excluding sub-directories or files on GNU / Linux.

Here are the 3 major ones:

1. Copy directory recursively and exclude sub-directories or files with GNU tar

Maybe the quickest way to copy and exclude directories is through a little 'hack' with GNU tar:

nginx:~# mkdir /usr/local/nginx-new

nginx:~# cd /usr/local/nginx

nginx:/usr/local/nginx# tar cvf - --exclude='./logs/*' . | (cd /usr/local/nginx-new; tar xvf -)

Note the exclude pattern is written relative to the current directory, the way the paths are stored in the archive, and is quoted so the shell does not expand the * itself.

Copying that way is slow, however. In my case it suits me perfectly, but for copying large chunks of data it is better not to use a pipe and instead use a regular tar operation + mv:

# cd /source_directory

# tar cvf test.tar --exclude='dir_to_exclude/*' --exclude='dir_to_exclude1/*' .

# mv test.tar /destination_directory

# cd /destination_directory

# tar xvf test.tar

2. Copy folder recursively excluding some directories with rsync

People who have experience with rsync already know how invaluable this tool is. Rsync can be used as a complete substitute for cp here:

# rsync -av --exclude='path1/to/exclude' --exclude='path2/to/exclude' source destination

This example can also be used as a solution to my case of copying nginx while excluding the logs directory, like so:

nginx:~# rsync -av --exclude='/logs/' /usr/local/nginx/ /usr/local/nginx-new

As you can see for yourself, this is far more readable than the tar variant. However, it will not work on servers where rsync is not installed, and installing it is not an option if you have to do the operation as a regular user on such a server. For that case the GNU tar hack is surely more 'portable' across systems.

rsync also has a Windows version, and therefore the same method should work on MS Windows and be good for batch scripting.

I've not tested it myself yet, as I've never used rsync on Windows. If someone has tried it and it works, please drop me a short message in the comments.

3. Copy directory and exclude sub directories and files with find

find in combination with cp can also be used to exclude certain directories while copying. In my view this method is better than the GNU tar hack. For machines where rsync is not installed it is a perfectly good way to copy files from location to location while excluding some directories. Here is an example use of find and cp for the above nginx case:

nginx:~# mkdir /usr/local/nginx-bak

nginx:~# cd /usr/local/nginx

nginx:/usr/local/nginx# find . -mindepth 1 -maxdepth 1 -type d \( ! -name logs \) -print -exec cp -rpf '{}' /usr/local/nginx-bak \;

This finds all the top-level directories inside /usr/local/nginx except logs, prints them on the screen, and then runs a recursive copy of each found directory into /usr/local/nginx-bak. The -mindepth 1 -maxdepth 1 options keep find from matching . itself (which would copy everything, logs included) and from descending into sub-directories which cp already copies recursively.

This example works fine in the nginx case because /usr/local/nginx does not contain any files, only sub-directories. In other cases, where the directory does contain some files besides the sub-directories, the files have to be copied as well, e.g.:

# for i in $(ls -p | grep -v '/$'); do cp -pf "$i" /destination/directory; done

This copies the files of the source directory (for instance /usr/local/nginx/my_file.txt, /usr/local/nginx/my_file1.txt etc.) which do not belong to a sub-directory. Note the loop splits on whitespace, so it only suits file names without spaces.

The cmd expression:

# ls -p | grep -v '/$'

lists only the files while excluding all the directories (ls -p marks directories with a trailing /), and in the for loop each of the files is copied to /destination/directory

If someone has better ideas, please share with me 🙂

Tags: argument, Auto, copy, copy cp, copy directory, copy folder, copying, destination directory, directory cd, Draft, eople, exclusion, feature, file, g man, gigabytes, gnu linux, gnu tar, linux linux, littke, location, man cp, mess, mess ups, msg, operation, p eople, production, production server, recursively, rpf, rsync, someone, something, source directory, subdirectories, substitute, tar cvf, tar xvf, test, tool, ups, xvf

Posted in Linux, Linux and FreeBSD Desktop, System Administration | 2 Comments »

Fix Null error in WordPress comment reply with wordpress-threaded-comments plugin enabled

Friday, April 6th, 2012

I've been running WordPress for about 3 years now. I can hardly remember the first WordPress install, but it was something like WordPress 2.5 or WordPress 2.4.

For quite a long time my WordPress blog has been powered by a number of plugins, which I regularly update whenever new plugin versions pop up …

Most of the time I haven't noticed problems during major WordPress platform updates or updates of the installed extensions. However, today, when I tried to reply to one of my blog comments, I was shocked that I couldn't.

Clicking in the Comment Reply box and typing inside was impossible, and a null message stayed filled in the form:

To catch what was causing this weird misbehaviour of the comment reply functionality, I grepped through my /var/www/blog/wp-content/plugins/* for the string movecfm(null,0,1,null):

# cd /var/www/blog/wp-content/plugins

# grep -rli 'movecfm(null,0,1,null)' */*.php

wordpress-thread-comment/wp-thread-comment.php

I took the string movecfm(null,0,1,null) from the browser page source (in Firefox, by pressing Ctrl+U).
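The same hunt can be generalised for any suspicious string from the page source. This is a sketch only: find_plugin_with_string is a hypothetical helper name, and it searches every file under the plugins directory, not just the *.php ones as my grep above did.

```shell
#!/bin/sh
# Sketch: print the names of the plugin directories whose files contain
# a given fixed string. find_plugin_with_string is a hypothetical helper.
find_plugin_with_string() {
    plugins_dir="$1"; needle="$2"
    # -r recurse, -l print file names only, -F treat the needle as a plain
    # string, so parentheses and commas need no escaping. Results look like
    # ./plugin-dir/file.php, hence field 2 is the plugin directory.
    (cd "$plugins_dir" && grep -rlF -- "$needle" . | cut -d/ -f2 | sort -u)
}
```

For my case the call would be find_plugin_with_string /var/www/blog/wp-content/plugins 'movecfm(null,0,1,null)'.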

Once I knew where the problem was, I first tried commenting out the occurrences of the null fields in wp-thread-comment.php. But as commenting them out caused other troubles, and I was too lazy to read the whole code, I checked online whether some other fellows had experienced the same shitty null javascript error and someone had already pointed at a solution. In a few minutes of searching I was unable to find anyone who had reported this bug, but what I did find were some user threads on wordpress.org mentioning that since WordPress 2.7 the wordpress-threaded-comments plugin is obsolete, as the functionality it provides is built into newer WP installs by default.

Hence, to enable WordPress's built-in threaded comments reply functionality, in the wp-admin panel I used:

Settings -> Discussions -> Enable Threaded (nested) comments (Tick)

As you can see, there is also an option to define how many levels deep nested comments can go. The default was 5, but I thought 5 was a bit low, so I increased it to 10 levels of replies per comment.

Finally, to prevent the default threaded comments from interfering with the WordPress Threaded Comments plugin, I disabled the plugin through the menus:

Plugins -> Active -> WordPress Thread Comments (Deactivate)

This solved the weird javascript null "bug" caused by wordpress-threaded-comments once and for all.

Hopefully from now on my blog readers will not have issues with threaded comment replies.

Tags: admin panel, Auto, code, Comment, Ctrl, Draft, fellows, few minutes, Firefox, form, grep, long time, misbehaving, movecfm, null fields, null message, number, occurances, option, page, page source, php, phpI, platform, plugin, Plugins, quot, reply comments, rli, someone, something, thread, threads, tick, time, time problems, Wordpress, wordpress blog, wp

Posted in Web and CMS, Wordpress | 1 Comment »