Recently for some UNKNOWN to ME reasons New Pc-Freak computer hardware crashed 2 times over last 2 weeks time, this was completely unexpected especially after the huge hardware upgrade of the system. Currently the system is equipped with 8GB of memory a a nice Dual Core Intel CPU running on CPU speed of 6 GHZ, however for completely unknown to me reasons it continued experience outages and mysteriously hang ups ….

So far I didn’t have the time to put some few documentary pictures of PC hardware on which this blog and the the rest of sites and shell access is running so I will use this post to do this as well:

Below I include a picture for sake of History preservation 🙂 of Old Pc-Freak hardware running on IBM ThinkCentre (1GB Memory, 3Ghz Intel CPU and 80 GB HDD):

The old FreeBSD powered Pc-Freak IBM ThinkCentre

Here are 2 photos of new hardware host running on Lenovo ThinCentre Edge:

My guess was those unsual “freezes” were caused due to momentum overloads of WebServer or MySQL db.

Actually the Linux Squeeze installed was “stupidly” installed with a 32 bit Debian Linux (by me). I did that stupidity, just few weeks ago, when I moved every data content (SQL, Apache config, Qmail accounts, Shell accounts etc. etc.) from old Pc-Freak computer to the new purchased one.

After finding out I have improperly installed (being in a hurry) – 32 Bit system, I’ve Upgrade only the system 32 bit kernel hich doesn’t support well more than 4GB to an amd64 one supporting up to 64GB of memory – if interested I’ve prior blogged on this here.

Thanks to my dear friend Alexander (who in this case should have a title similar to Alexander the Great – for he did great and not let me down being there in such a difficult moment for me spending from his personal time helping me bringing up Pc-Freak.Net. To find a bit more about Alex you might check his personal home page hosted on www.pc-freak.net too here 🙂

I don’t exaggerate, really Alex did a lot for me and this is maybe the 10th time I disturb him over the last 2 years, so I owe him a lot ! Alex – I really owe you a lot bro – thanks for your great efforts; thanks for going home 3 times for just to days, thanks for recording Rescue CDs, staying at home until 2 A.M. and really thanks for all!!

Just to mention again, to let me via Secure Shell, Alex burned and booted for me Debian Linux Rescue Live CD downloaded from linke here.

This time I messed my tiny little home hosted server, very very badly!!! Those of you who might read my blog or have SSH accounts on Pc-Freak.NET, already should have figured out Pc-Freak.net was down for about 2 days time (48 HOURS!!!!).

The exact “official” downtime period was:

Saturday OCTOBER 13!!!( from around 16:00 o’clock – I’m not fatalist but this 13th was really a harsh date) until Monday 15-th of Oct (14:00h) ….

I’m completely in charge and responsible for the 2 days down time, and honestly I had one of my worst life days, so far. The whole SHIT story occurred after I attempted to do a 32 bit (i386) to AMD64 (64 bit) system packages deb binary upgrade; host is installed to run Debian Squeeze 6.0.5 ….; Note to make here is Officially according to documentation package binary upgrades from 32 bit to 64 arch Debian Linux are not possible!. Official debian.org documentation recommended for 32 bit to 64 packs update (back up all system existent data) and do a clean CD install / re-install, over the old installed 32 bit version. However ignoring the official documentation, being unwise and stubborn, I decided to try to anyways upgrading using those Dutch person guide … !!!

I’ve literally followed above Dutch guy, steps and instead of succeeding 64 bit update, after few of the steps outlined in his article the node completely (libc – library to which all libraries are linked) broke up. Then trying to fix those amd64 libc, I tried re-installing coreutils package part of base-files – basis libs and bins deb;

I’ve followed few tutorials (found on the next instructing on the 32bit to 64 bit upgrade), combined chunks from them, reloaded libc in a live system !!! (DON’T TRY THAT EVER!); then by mistake during update deleted coreutils package!!!, leaving myself without even essential command tools like /bin/ls , /bin/cp etc. etc. ….. And finally very much (in my fashion) to make the mess complete I decided to restart the system in those state without /bin/ls and all essential /bins ….

Instead of making things better I made the system completely un-bootable 🙁

Well to conclude it, here I am once again I stupid enough not to follow the System Administrator Golden Rule of Thumb:

– IF SOMETHING WORKS DON’T TOUCH IT !!!!!!!!! EVER !!!!, cause of my stubbornness I screw it up all so badly.

I should really take some moral from this event, as similar stories has happened to me long time ago on few Fedora Linux hosts on productive Web servers, and I went through all this upgrades nightmare but apparently learned nothing from it. My personal moral out of the story is I NEVER LEARN FROM MY MISTAKES!!! PFFF …

I haven’t had days like this in which I was totally down, for a very long time, really I fell in severe desperation and even depressed, after un-abling to access in any way Pc-Freak.NET, I even thought it will be un-fixable forever and I will loose all data on the host and this deeply saddened me.

Here is good time to Give thanks to Svetlana (Sveta) (A lovely kind, very beautiful Belarusian lady 🙂 who supported me and Sali and his wife Mimi (Meleha) who encouraged and lived up my hardly bearable tempper when angry or/and sad :)). Lastly I have to thank a lot to Happy (Indian Lady whose whose my dear indian brother Jose met me with in Skype earlier. Happy encouraged me in many times of trouble in Skype, giving me wise advices not to take all so serious and be more confied, also most importantly Happy helped me with her prayers …. Probably many others to which I complained about situation helped with their prayers too – Thanks to to God and to all and let God return them blessing according to their good prayers for me !

Some people who know me well might know Pc-Freak.Net Linux host has very sentimental value for me and even though it doesn’t host too much websites (only 38 sites not so important ones ), still it is very bad to know your “work input” which you worked on in your spare time over the last 3 years (including my BLOG – blogging almost every day for last 3 yrs, the public shell SSH access for my Friends, custom Qmail Mail server / POP3 and IMAP services / SQL data etc. might not be lost forever. Or in more positive better scenario could be down for huge period of time like few months until I go home and fix it physically on phys terminal …

All this downtime mess occurred due to my own inability to estimate properly update risks (obviously showing how bad I’m in risk management …). Whole “down time story”” proofed me only, I have a lot to learn in life and worry less about things ….

It also show me how much of an “idol”, one can make some kind of object of daily works as www.pc-freak.net become to me. Good thing is I at least realize my blog has with time, become like an idol to me as I’m mostly busy with it and in a way too much worrying for it makes me fill up in the gap “worshipping an idol” and each Christian knows pretty well, God tells us: “Do not have other Gods besides me”.

I suppose this whole mess was allowed to happen by God’s Great Mercy to show me how weak my faith is, and how often I put my personal interest on top of real important things. Whole situation teached me, once again I easy fall in spirit and despair; hope it is a lesson given to me I will learn from and next time I will be more solid in critical situation …

Here are some of my thoughts on the downtime, as I felt obliged to express them too;

Whole problem severeness (in my mind), would not be so bad if I only had some kind of physical access to System terminal. However as I’m currently in Arnhem Holland 6500 kilometers away from the Server (hosted in Dobrich, Bulgaria), don’t have access to IPKVM or any kind of web management to act on the physical keyboard input, my only option was to ask Alex go home and tell him act as a pro tech support which though I repeat myself I will say again, he did great.

What made this whole downtime mess even worser in my distorted vision on situation is, fact; I don’t know people who are Linux GURUs who can deal with the situation and fix the host without me being physically there, so this even exaggerated me worrying it even more …

I’m relatively poor person and I couldn’t easily afford to buy a flight ticket back to Bulgaria which in best case as I checked today in WizzAir.com’s website would costs me about 90EUR (at best – just one way flight ticket ) to Sofia and then more 17 euro for bus ticket from Sofia to Dobrich; Meaning whole repair costs would be no less than 250 EUR with prince included train ticket expenses to Eindhoven.);

Therefore obviously traveling back to fix it on physical console was not an option.

Some other options I considered (as adviced by Sveta), was hiring some (pro sysadm to fix the host) – here I should say it is almost impossible to find person in Dobrich who has the Linux knowledge to fix the system; moreover Linux system administrators are so expensive these days. Most pro sysadmins will not bother to fix the host if not being paid hour – fee of at least 40 / 50 EUR. Obviously therefore hiring a professional UNIX system adminsitrator to solve my system issues would have cost approximately equal to travel expenses of myself, if going physically to the computer; spend the same 5 hours fixing it and loose at least 2 or 3 more days in traveling back to Holland …..

Also it is good to mention on the system, I’ve done a lot of custom things, which an external hired person will be hardly possible to deal with, without my further interference and even if I had hired someone to fix it I would have spend at least 50 euro on Phone Bills to explain specifics ….

As I was in the shit, I should thanks in this post also (on first place) to MY DEAR SISTER Stanimira !!! My sis was smart enough to call my dear friend Alexander (Alex), who as always didn’t fail me – for a 3rd time BIG THANKS ALEX !, spending time and having desire to help me at this critical times. I instructed him as a first step to try loading on the unbootable linux, the usual boot-able Debian Squeeze Install LiveCD….

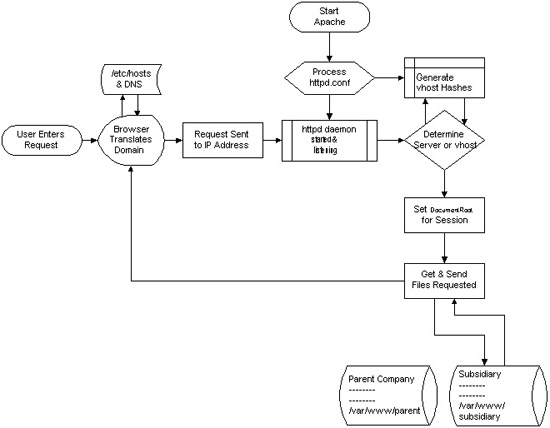

So far so good, but unfortunately with this bootable CD, the problem is Debian Setup (Install) CD does not come equipped with SSHD (SSH Server) by default and hence I can’t just get in via Internet;

I’ve searched through the net if there is a way to make the default Debian Install CD1 (.iso) recovery CD to have openssh-server enabled, but couldn’t find anyone explainig how ?? If there is some way and someone reading this post knows it please drop a comment ….

As some might know Debian Setup CD is running as its basis environment busybox; system tools there provided whether choosing boot the Recovery Console are good mostly for installing or re-installing Debian, but doesn’t include any way to allow one to do remote system recovery over SSH connection.

Further on, have instructed Alex, brought up the Network Interfacse on the system with ifconfig using cmds:

# /sbin/ifconfig MY_IP netmask 255.255.255.240

# /sbin/route add default gw MY_GATEWAY_IP;

BTW, I have previously blogged on how to bring network interfaces with ifconfig here

Though the LAN Interfaces were up after that and I could ping ($ ping www.pc-freak.net) this was of not much use, as I couldn’t log in. Neither somehow can access system in a chroot.

I did thoroughfully explained Alex, how to fix the un-chroot-table badly broken (mounted) system. ….

In order to have accessed the system via SSH, after a bit of research I’ve asked Alex to download and boot from the CD Drive Debian Linux based AMD64 Rescue CD available here ….

Using this much better rescue CD than default Debian Install CD1, thanks God, Alex was able to bring up a working sshd server.

To let me access the rescue CD, Alex changed root pass to a trivial one with usual:

# passwd root

....

Then finally I logged in on host via ssh. Since chroot over the mounted /vev/sda1 in /tmp/aaa was impossible due to a missing working /bin/bash – Here just try imagine how messed up this system was!!!, I asked Alex to copy over the basic system files from the Rescue CD with cp copy command within /tmp/aaa/. The commands I asked him to execute to override some of the old messed up Linux files were:

# cp -rpf /lib/* /tmp/aaa/lib

# cp -rpf /usr/lib/* /tmp/aaa/usr/lib

# cp -rpf /lib32/* /tmp/aaa/lib32

# cp -rpf /bin/* /tmp/aaa/bin

# cp -rpf /usr/lib64/* /tmp/aaa/usr/lib64

# cp -rpf /sbin/* /tmp/aaa/sbin

# cp -rpf /usr/sbin/* /tmp/aaa/usr/sbin

After this at least chroot /tmp/aaa worked!! Thanks God!

I also said Alex to try bootstrap to install a base debian system files inside the broken /tmp/aaa, but this didn’t make things better (so I’m not sure if debootstrap helped or made things worse)??. Exact bootstrap command tried on the host was:

# debootstrap --arch amd64 squeeze /tmp/aaa http://ftp.us.debian.org/debian

This command as explained in Debian Wiki Debootstrap section is supposed to download and override basis Linux system with working base bins and libs.

After I logged in over ssh, I’ve entered chroot-ing and following instructions of 2 of my previous articles:

1. How to do proper chroot and recover broken Ubuntu using mount and chrooting

2. How to mount /proc and /dev and in chroot on Linux – for fail system recovery

Next on, after logging in via ssh I chrooted to mounted system;

# mount /dev/sda1 /mnt/aaa

# chroot /mnt/aaa

Inside chrooted environment, I tried running ssh server, listen on separate port 2208 with command:

# /usr/sbin/sshd -p 2208

sshd did not start up but spitted mer error: PRNG is not seeded, after reading a bit online I’ve found others experiencing PRNG is not seeded err in thread here

The PRNG is not seeded error is caused due to a missing /dev/urandom inside the chroot-ed environment:

# ls -al /dev/urandom

ls: cannot access /dev/urandom: No such file or directory

To solve it, one has to create /dev/urandom with mknod command:

# mknod /dev/urandom c 1 9

….

Something else worthy to mention is very helpful post found on noah.org explaining few basic things on apt, aptitude and dpkg which helped me over the whole severe failed dependency apt-get issues experienced inside chroot.

Inside the chroot, I tried using few usual apt-get cmds to solve the multiple appearing broken packages inter-dependency. I tried:

# apt-get update

....

# apt-get --yes upgrade

# apt-get -f install

Even before that apt, package was broken, so I instructed Alex, to download me one from a web link. By mistake I gave him, a Debian Etch apt version instead of Debian Squeze. So using once again dpkg -i apt* after downloading the latest stable apt deb binaries from debian.org, I had to re-install apt-get…

Besides that Alex, had copied a bunch of libraries, straight copied from my notebook running amd64 Debian Squeeze and has to place all this transferred binaries in /mnt/aaa/{lib,usr/lib} in order to solve missing libraries for proper apt-get operation.

As it seemed slightly impossible fix the broken dependencies with apt-get, I first tried fixing failed inter-dependencies using the other automated dependency solver tool (written in perl language) aptitude. I tried with it solving the situation issuing:

# aptitute update

# aptitude safe-upgrade

# aptitude safe-upgrade --full-resolver

No of the above aptitude command options helped anyhow, so

I’ve decided to try the old but gold approach of combining common logic with a bit of shell scripting 🙂

Here is my customly invented approach 🙂 :

1. Inside the chroot, make a dump of all installed deb packages names in a file

2. Outside the chroot straight ssh-ing again to the Rescucd shell, use RescueCD apt-get to only download all amd64 binaries corresponding to dumped packages names

3. Move all downloaded only apt-get binaries from /var/cache/apt/archives to /mnt/aaa/var/cache/apt/archives

4. Inside chroot, run cd to /var/cache/apt/archives/ and use for bash loop to install each package with dpkg -i

Inside Chroot-ed environment chroot /tmp/aaa, dpkg – to dump list of all installed i386 previous packages on broken system:

# dpkg -l|awk '{ print $2 }' >> /mnt/aaa/root/all_deb_packages_list.txt

Thereon, I delete first 5 lines in beginning of file (2 empty lines) and 3 lines with content:

Status=Not/Inst/Conf-files/Unpacked/halF-conf/Half-inst/trig-aWait/Trig-pend

Err?=(none)/Reinst-required

Name

should be deleted.

Onwards outside of chroot-ed env, I downloaded all deb packages corresponding to previous ones in all_deb_packges.txt:

# mkdir /tmp/apt

# cd /tmp/apt

# for i in $(cat /mnt/aaa/root/all_deb_packages.txt; do \

apt-get --download-only install -yy $i \

....

.....

done

In a while after 30 / 40 minutes all amd64 .deb packages were downloaded in rescuecd /var/cache/apt/archives/.

/var/cache/apt/archives/ in LiveCDs is stored in system memory, thanksfully I have 8 Gigabytes of memory on the host so memory was more than enough to store all packs 😉

Once above loop, completed. I copied all debs to /mnt/aaa/var/cache/apt, i.e.:

# cp -vrpf /var/cache/apt/archives/*.deb /mnt/aaa/var/cache/apt/archives/

Then back in the (chroot-ed broken system), in another ssh session chroot /mnt/aaa, I run another shell loop aim-ing to install each copied deb package (below command should run after chroot-ing):

# cd /var/cache/apt/archives

# for i in *.deb; do \

dpkg -i $i

done

I had on the system installed Qmail server which was previously linked against old 32 bit installed libs, so in my case was also necessery rebuild qmail install as well as ucsp-tcp and ucsp-ssl, after rebooting and booting the finally working amd64 libs system (after reboot and proper boot!):

a) to Re-compile qmail base binaries, had to issue:

# qmailctl stop

# cd /usr/src/qmail

# make clean

# make man

# make setup check

b) to re-compile ucspi-tcp and ucspi-ssl:

# rm -rf /packages/ucspi-ssl-0.70.2/

#mkdir /packages

# chmod 1755 /packages

# cd /tmp

# tar -zxvf /downloads/ucspi-ssl-0.70.2.tar.gz

....

# mv /tmp/host/superscript.com/net/ucspi-ssl-0.70.2/ /packages

# cd /packages/ucspi-ssl-0.70.2/

# rm -rf /tmp/host/

# sed -i 's/local\///' src/conf-tcpbin

# sed -i 's/usr\/local/etc/' src/conf-cadir

# sed -i 's/usr\/local\/ssl\/pem/etc\/ssl/' src/conf-dhfile

# openssl dhparam -check -text -5 1024 -out /etc/ssl/dh1024.pem

Then had to stop temporary daemontools service, through commenting line in /etc/inittab:

# SV:123456:respawn:/usr/bin/svscanboot

# init q

After that remove commented line:

SV:123456:respawn:/usr/bin/svscanboot

and consequentually install ucsp-{tcp,ssl}:

# cd /packages/ucspi-ssl-0.70.2/

# package/compile

# package/rts

# package/install

c) Rebuild Courier-Imap and CourierImapSSL

As I have custom compiled Courier-IMAP and Courier-IMAPSSL it was necessery to rebuild Courier-imaps following steps earlier explained in this article

I have on the system running DjbDNS as local caching server so I had to also re-install djbdns, re-compiling it from source

Finally after restart the system booted OKAY!! Thanks God!!!!!! 🙂

Further on to check the boot-ed system runs 64 bit architecture dpkg should be used

To check if the system architecture is 64 now 64 bit, there is a command dpkg-architecture, as I learned from superuser.com forums thread here

root@pcfreak:~# dpkg-architecture -qDEB_HOST_ARCH

amd64

One more thing, which helped me a lot during the whole system recovery was main Debian deb HTTP repositories ftp.us.debian.org/debian/pool/ , I’ve downloaded apt (amd64 Squeeze) version and few other packages from there.

Hope this article helps someone who end up in 32 to 64 bit debian arch upgrade. Enjoy 🙂