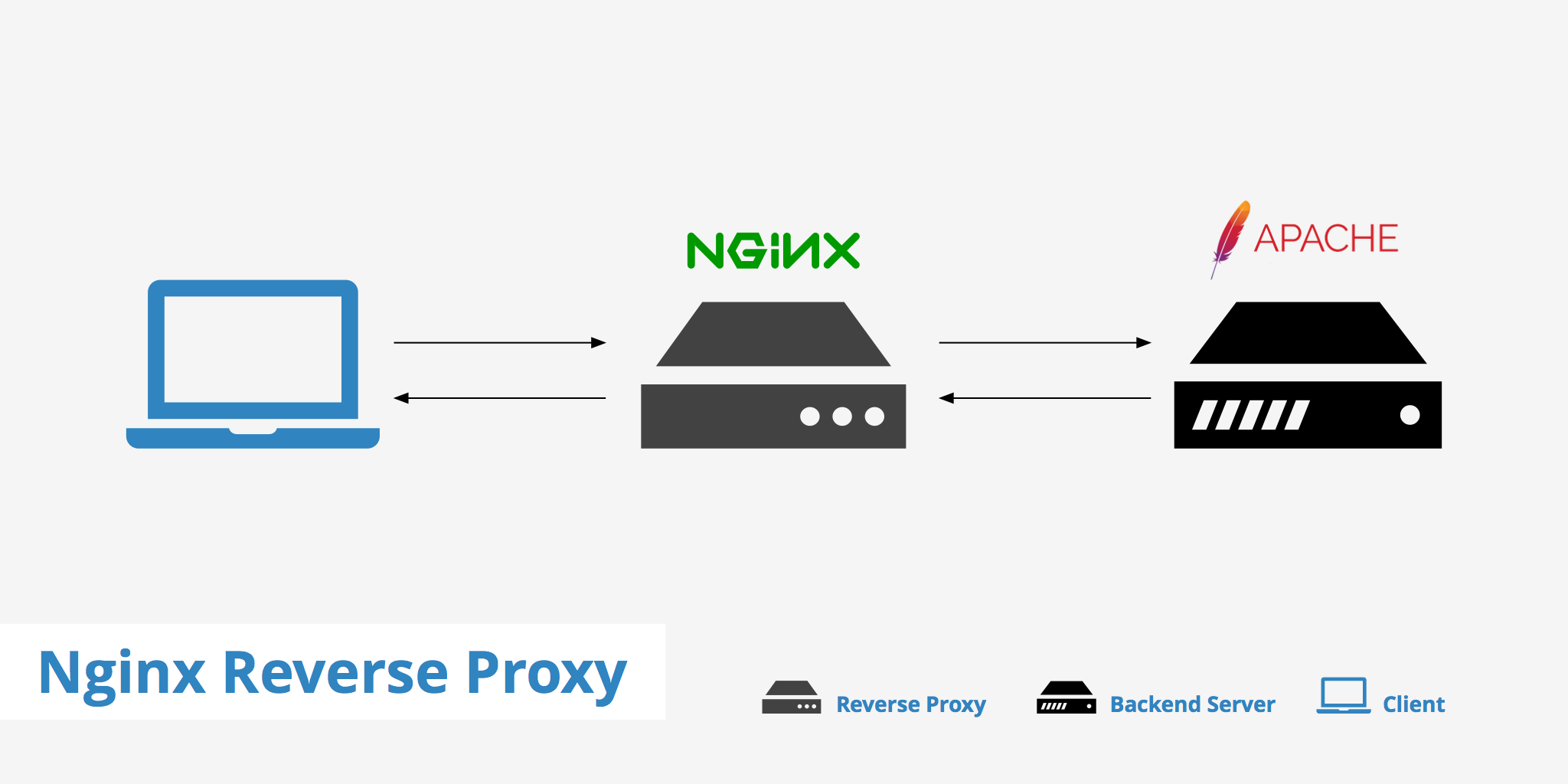

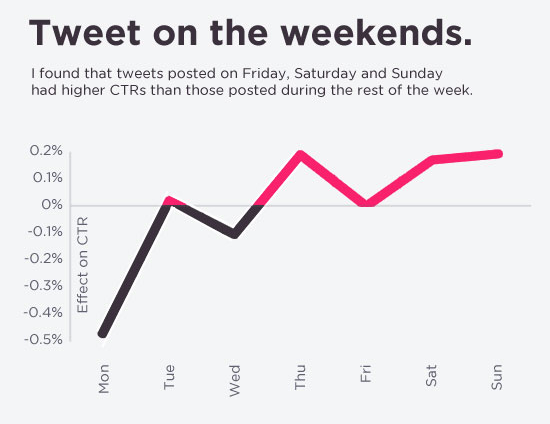

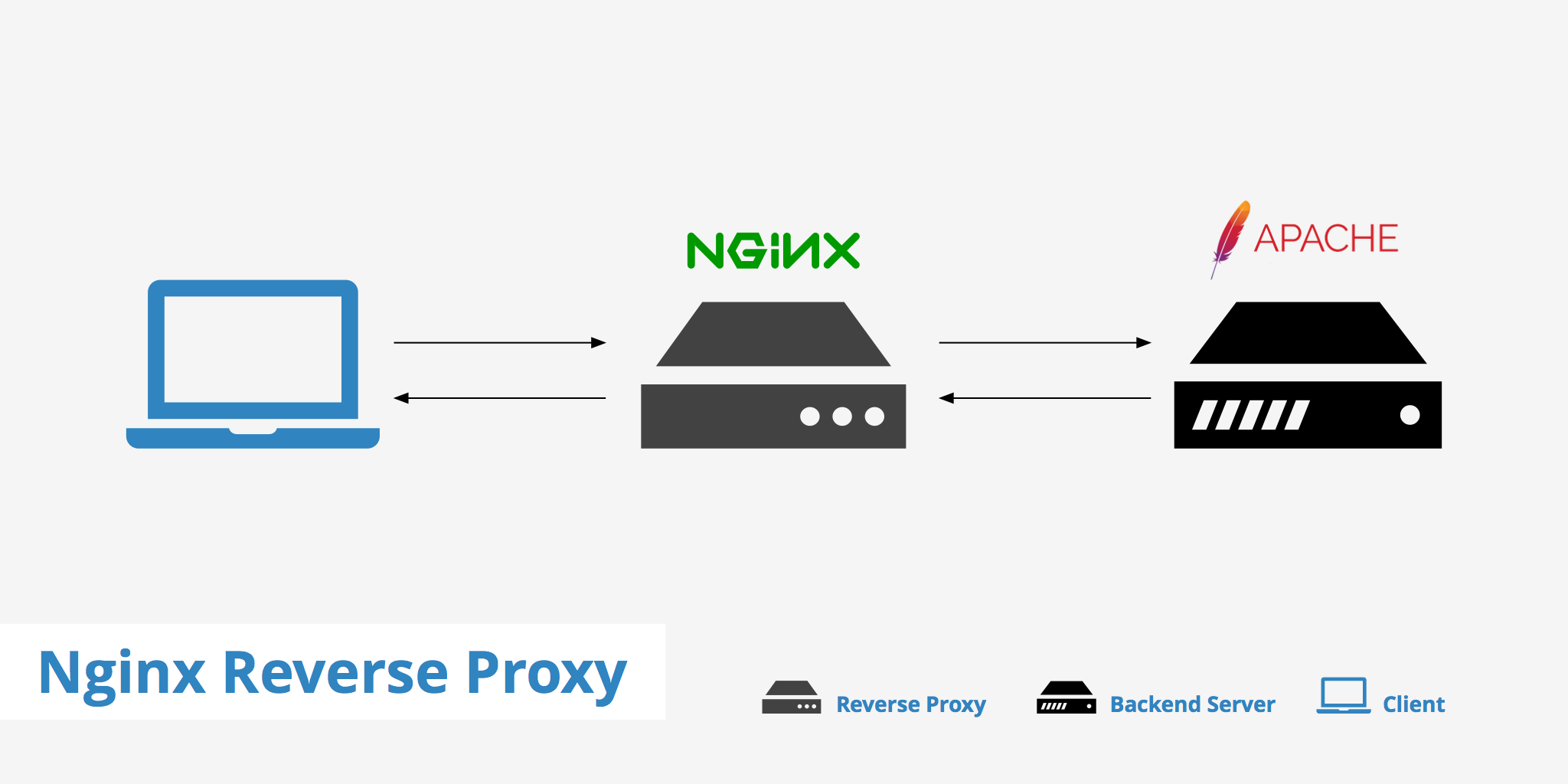

What is a reverse proxy?

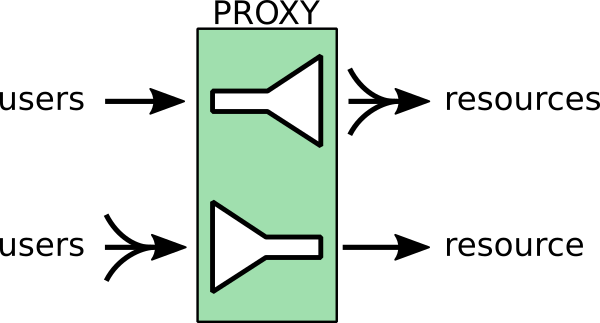

A Reverse Proxy (RP) is a proxy server which routes all incoming traffic to a secondary webserver situated behind the Reverse Proxy host.

All replies from the secondary webserver (which is not visible from the Internet) are then routed back through the Reverse Proxy service. As a result, it looks like all incoming and outgoing HTTP requests are served by the Reverse Proxy host, whereas in reality the reverse proxy host only redirects traffic. The downside of a reverse proxy is that it is one more point of failure. The upside is that it can protect your webserver by routing only certain traffic to it, shielding the server behind the reverse proxy from crackers' malicious HTTP requests.

In short, a reverse proxy accepts all traffic and forwards it to a specific resource, like a server or container. Earlier I've blogged on how to create an Apache reverse proxy to Tomcat.

Having a reverse proxy with Apache is a classical scenario, however NGINX is slowly taking the lead and displacing Apache thanks to its ease of configuration, high reliability and lower consumption of resources.

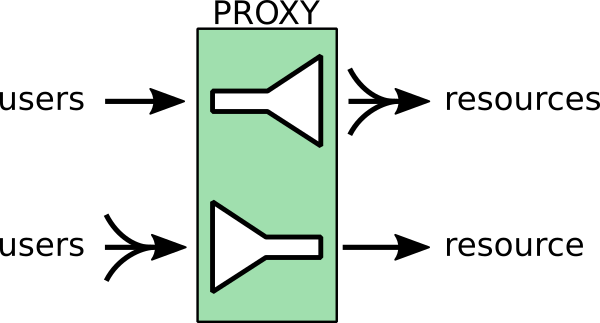

One of the most common uses of a Reverse Proxy is as a software Load Balancer for traffic towards another webserver or directly to a backend server. Using an RP to mitigate DDoS attacks from botnets of hacked computers (coming from a network range) is a very common anti-DDoS protection measure.

With the bloom of VM and containerization technologies such as Docker, and the trend to switch services towards micro-services, a reverse proxy is often used to seamlessly redirect incoming request traffic to multiple separate running Docker containers etc.

Some of the other security pros of using a Reverse Proxy are:

- Restrict access to locations that may be obvious targets for brute-force attacks, and reduce the effectiveness of DDoS attacks by limiting the number of connections and the download rate per IP address.

- Cache pre-rendered versions of popular pages to speed up page load times.

- Interfere with other unwanted traffic when needed.
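As a rough illustration of the connection and download-rate limiting mentioned above, the shell function below writes an example nginx snippet to a file of your choice. The file name, the zone name perip, the /login location and the numeric limits are all assumptions made up for this sketch; limit_conn_zone, limit_conn and limit_rate are standard nginx directives, but tune the values to your own traffic.

```shell
#!/bin/sh
# Write an example rate-limiting snippet to the given path.
# The default file name is hypothetical; nothing is installed into /etc/nginx.
write_rate_limit_example() {
    out=${1:-./rate-limit.conf.example}
    # The quoted 'EOF' keeps $binary_remote_addr literal (no shell expansion).
    cat > "$out" <<'EOF'
# http context: track connections per client IP in a 10 MB shared zone
limit_conn_zone $binary_remote_addr zone=perip:10m;

server {
    listen 80;
    location /login {
        limit_conn perip 10;   # at most 10 concurrent connections per IP
        limit_rate 500k;       # cap response speed of each connection
        proxy_pass http://127.0.0.1:8000;
    }
}
EOF
}

# Usage:
#   write_rate_limit_example /tmp/rate-limit.conf.example
```

Review the generated file before copying any part of it into a real configuration, and run nginx -t afterwards.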

1. Install nginx webserver

Assuming you have Debian at hand as the OS for the Nginx RP host, let's install nginx.

[hipo@proxy ~]$ sudo su - root

…

[root@proxy ~]# apt update

…

[root@proxy ~]# apt install -y nginx

…

Fedora / CentOS / Redhat Linux can use dnf or yum to install NGINX:

[root@proxy ~]# dnf install -y nginx

[root@proxy ~]# yum install -y nginx

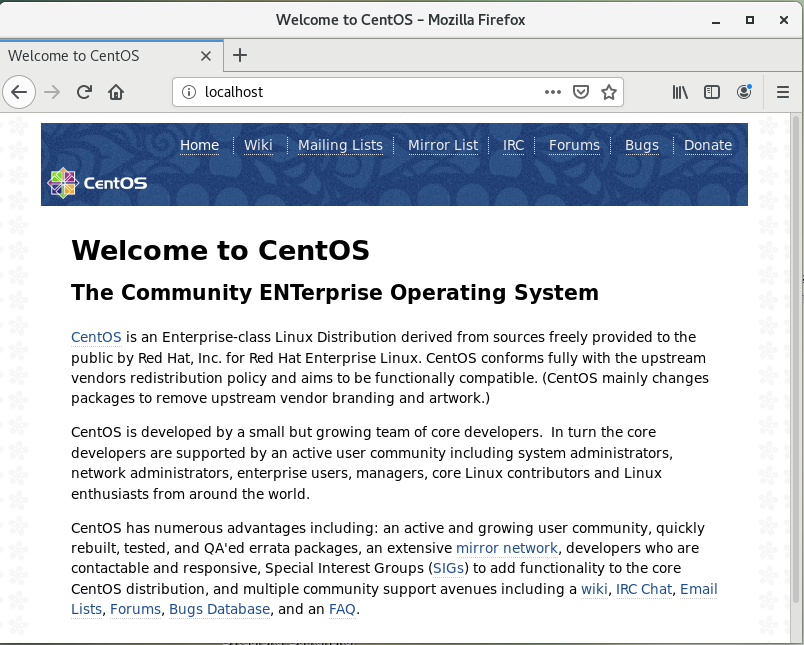

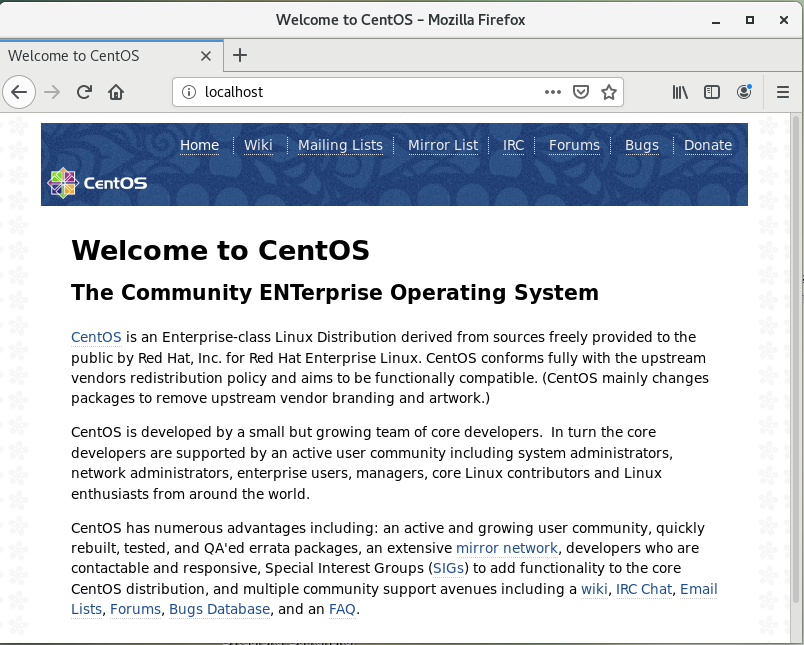

2. Launch nginx for the first time and test

Start nginx once to test that the default configuration is reachable

systemctl enable --now nginx

To test that nginx works out of the box right after install, open a browser and go to http://localhost if you have X, or use a text based browser such as lynx or a console web fetcher such as curl to verify that the web server is running as expected.
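For the console check, a minimal sketch with curl could look like the following. The function name show_status_line is made up for this example; it only assumes that curl is installed on the proxy host.

```shell
#!/bin/sh
# Print the HTTP status line returned by a URL (defaults to the local nginx).
# -s silences the progress meter, -I sends a HEAD request and shows headers.
show_status_line() {
    url=${1:-http://localhost/}
    curl -sI "$url" | head -n 1
}

# Usage (run on the proxy host once nginx is started):
#   show_status_line
#   show_status_line http://localhost/
```

A status line with code 200 suggests the default nginx page is being served; no output at all usually means nothing is listening on that address.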

3. Create Reverse proxy configuration file

Remove default Nginx package provided configuration

As of 2020, nginx by default loads its site configuration from /etc/nginx/sites-enabled/default on DEB based Linuxes and from /etc/nginx/nginx.conf on RPM based ones. Therefore, to set up our desired configuration and stop the default domain config from being loaded, we have to unlink it on Debian:

[root@proxy ~]# unlink /etc/nginx/sites-enabled/default

or move out the original nginx.conf on Fedora / CentOS / RHEL:

[root@proxy ~]# mv /etc/nginx/nginx.conf /etc/nginx/nginx.conf-distro

Let's take a scenario where you have a local IP address that's NAT-ted or DMZ-ed and you want to run nginx as a reverse proxy to accelerate traffic and forward all traffic to another webserver such as LigHTTPD / Apache, or towards a Java application server such as JBoss / Tomcat, that listens on the same host on, let's say, port 8000, with the application accessible under /application/.

To do so, prepare a new /etc/nginx/nginx.conf to look like so:

[root@proxy ~]# mv /etc/nginx/nginx.conf /etc/nginx.conf.bak

[root@proxy ~]# vim /etc/nginx/nginx.conf

user nginx; # on Debian / Ubuntu the packaged nginx runs as www-data, so use: user www-data;

worker_processes auto;

worker_rlimit_nofile 10240;

pid /run/nginx.pid;

include /etc/nginx/modules-enabled/*.conf;

events {

# worker_connections 768;

worker_connections 4096;

multi_accept on;

# multi_accept on;

}

http {

##

# Basic Settings

##

sendfile on;

tcp_nopush on;

tcp_nodelay on;

#keepalive_timeout 65;

keepalive_requests 1024;

client_header_timeout 30;

client_body_timeout 30;

keepalive_timeout 30;

types_hash_max_size 2048;

# server_tokens off;

# server_names_hash_bucket_size 64;

# server_name_in_redirect off;

include /etc/nginx/mime.types;

default_type application/octet-stream;

##

# SSL Settings

##

ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE

ssl_prefer_server_ciphers on;

##

# Logging Settings

##

access_log /var/log/nginx/domain.com/access.log;

error_log /var/log/nginx/domain.com/error.log;

##

# Gzip Settings

##

gzip on;

# gzip_vary on;

# gzip_proxied any;

# gzip_comp_level 6;

# gzip_buffers 16 8k;

# gzip_http_version 1.1;

# gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

##

# Virtual Host Configs

##

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/sites-enabled/*;

include /etc/nginx/default.d/*.conf;

upstream myapp {

server 127.0.0.1:8000 weight=3;

server 127.0.0.1:8001;

server 127.0.0.1:8002;

server 127.0.0.1:8003;

# Uncomment and use Load balancing with external FQDNs if needed

# server srv1.example.com;

# server srv2.example.com;

# server srv3.example.co

}

}

#mail {

# # See sample authentication script at:

# # http://wiki.nginx.org/ImapAuthenticateWithApachePhpScript

#

# # auth_http localhost/auth.php;

# # pop3_capabilities "TOP" "USER";

# # imap_capabilities "IMAP4rev1" "UIDPLUS";

#

# server {

# listen localhost:110;

# protocol pop3;

# proxy on;

# }

#

# server {

# listen localhost:143;

# protocol imap;

# proxy on;

# }

#}

In the example above, there are 4 instances of the same application running on the same IP (127.0.0.1) on different ports (8000 to 8003). Just to illustrate that load balancing across Fully Qualified Domain Names (FQDNs) is also supported, you can see the 3 commented application instances srv1-srv3.

When the load balancing method is not specifically configured, it defaults to round-robin. Requests proxied to the server group myapp (that is, with proxy_pass http://myapp;) are distributed by nginx among its servers using HTTP load balancing. The reverse proxy implementation in nginx includes load balancing for HTTP, HTTPS, FastCGI, uwsgi, SCGI, memcached, and gRPC.
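To make the effect of weight=3 more concrete, here is a small arithmetic sketch in shell. It does not talk to nginx at all; it only adds up the weights from the upstream block above (servers without an explicit weight count as 1) and prints the share of requests each backend should receive on average under weighted round-robin.

```shell
#!/bin/sh
# Expected share of requests per backend under weighted round-robin.
# Addresses and weights mirror the upstream block (default weight is 1).
backends="127.0.0.1:8000=3 127.0.0.1:8001=1 127.0.0.1:8002=1 127.0.0.1:8003=1"

# Sum all weights; ${b##*=} keeps only the part after the "=".
total=0
for b in $backends; do
    total=$((total + ${b##*=}))
done

# Print one line per backend; ${b%%=*} keeps only the address part.
shares=""
for b in $backends; do
    addr=${b%%=*}
    weight=${b##*=}
    line="$addr gets $weight of every $total requests"
    echo "$line"
    shares="$shares$line;"
done
```

With these weights the instance on port 8000 takes 3 of every 6 requests, and each of the other three instances takes 1.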

To configure load balancing for HTTPS instead of HTTP, just use “https” as the protocol in the proxy_pass directive.

To download above nginx.conf configured for High traffic servers and supports Nginx virtualhosts click here.

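Now let's prepare a separate file for the reverse proxy configuration. The nginx.conf above includes /etc/nginx/conf.d/*.conf, /etc/nginx/default.d/*.conf and everything under /etc/nginx/sites-enabled/, so any configuration placed there is read by nginx. Here the file is created under /etc/nginx/sites-available/ and later symlinked into /etc/nginx/sites-enabled/.

We'll need to prepare a sample nginx server configuration: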

[root@proxy ~]# vim /etc/nginx/sites-available/reverse-proxy.conf

server {

listen 80;

listen [::]:80;

server_name domain.com www.domain.com;

#index index.php;

# fallback for index.php usually this one is never used

root /var/www/domain.com/public ;

#location / {

#try_files $uri $uri/ /index.php?$query_string;

#}

location / {

proxy_pass http://127.0.0.1:8000;

}

location /application {

proxy_pass http://domain.com/application/ ;

proxy_http_version 1.1;

proxy_cache_bypass $http_upgrade;

# Proxy headers

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Host $host;

proxy_set_header X-Forwarded-Port $server_port;

# Proxy timeouts

proxy_connect_timeout 60s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

access_log /var/log/nginx/reverse-access.log;

error_log /var/log/nginx/reverse-error.log;

}

##listen 443 ssl;

## ssl_certificate /etc/letsencrypt/live/domain.com/fullchain.pem;

## ssl_certificate_key /etc/letsencrypt/live/domain.com/privkey.pem;

## include /etc/letsencrypt/options-ssl-nginx.conf;

## ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

}

Get above reverse-proxy.conf from here

As you can see, the config makes all incoming traffic for the root location ( / ) of domain http://domain.com on port 80 of the Nginx webserver be passed on to http://127.0.0.1:8000.

location / {

proxy_pass http://127.0.0.1:8000;

}

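The second location block reverse proxies requests for domain.com/application to the backend named in its proxy_pass directive, http://domain.com/application/. For this to work, domain.com has to resolve on the proxy host to the backend webserver and not to the proxy itself, otherwise the requests would loop. If your application server listens on another address or port (for example 8080), put that address and port in proxy_pass instead.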

location /application {

proxy_pass http://domain.com/application/ ;

proxy_http_version 1.1;

proxy_cache_bypass $http_upgrade;

# Proxy headers

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Host $host;

proxy_set_header X-Forwarded-Port $server_port;

# Proxy timeouts

proxy_connect_timeout 60s;

proxy_send_timeout 60s;

proxy_read_timeout 60s;

access_log /var/log/nginx/reverse-access.log;

error_log /var/log/nginx/reverse-error.log;

}
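One detail worth checking in a block like this is how the location prefix and the URI in proxy_pass combine. As far as I understand the documented rule, when proxy_pass carries a URI, the part of the request URI that matched the location is replaced by that URI. The helper map_uri below is a made-up name that only mimics this string replacement in shell so you can see the resulting upstream path; it does not call nginx.

```shell
#!/bin/sh
# map_uri LOCATION PROXY_URI REQUEST_URI
# Mimics the replacement rule: the matched location prefix of the request
# URI is swapped for the URI part given in proxy_pass.
map_uri() {
    location=$1
    proxy_uri=$2
    request=$3
    rest=${request#"$location"}   # strip the matched location prefix
    printf '%s%s\n' "$proxy_uri" "$rest"
}

# location /application  + proxy_pass .../application/
map_uri /application  /application/ /application/login   # /application//login
# location /application/ + proxy_pass .../application/
map_uri /application/ /application/ /application/login   # /application/login
```

In this sketch the first combination produces a doubled slash in the upstream path, so keeping the trailing slash the same on both the location and the proxy_pass URI is the safer choice if your backend is picky about paths.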

- Enable the new configuration in NGINX

[root@proxy ~]# ln -s /etc/nginx/sites-available/reverse-proxy.conf /etc/nginx/sites-enabled/reverse-proxy.conf

4. Test reverse proxy nginx config for syntax errors

[root@proxy ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful
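Once the syntax test passes, the running nginx still has to pick up the new files. A minimal sketch that reloads only when the test succeeds could look like this; apply_nginx_config is a name invented for the example, and it assumes a systemd based host.

```shell
#!/bin/sh
# Validate the configuration and reload nginx only if validation passes.
apply_nginx_config() {
    if ! command -v nginx >/dev/null 2>&1; then
        echo "nginx binary not found in PATH" >&2
        return 127
    fi
    # && stops here when nginx -t reports an error, so a broken config
    # is never loaded into the running server.
    nginx -t && systemctl reload nginx
}

# Usage (as root on the proxy host):
#   apply_nginx_config
```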

Then test connectivity to the external IP address nginx listens on.
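One way to run that check from another machine, before DNS points at the proxy, is to send the Host header by hand. The function name check_vhost and the address 203.0.113.10 (a documentation-range placeholder) are assumptions for this sketch; replace them with your own external IP and domain.

```shell
#!/bin/sh
# check_vhost IP HOSTNAME
# Request the given virtual host from the server at IP and print only the
# HTTP status code. 203.0.113.10 in the usage line is a placeholder address.
check_vhost() {
    ip=$1
    host=$2
    curl -s -o /dev/null -w '%{http_code}\n' -H "Host: $host" "http://$ip/"
}

# Usage:
#   check_vhost 203.0.113.10 domain.com
```

A 200 (or a 301 / 302 once HTTPS redirection is enabled) indicates the server block for that domain answered; 000 means curl could not connect at all.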

5. Enable nginx SSL letsencrypt certificates support

[root@proxy ~]# apt-get update

[root@proxy ~]# apt-get install software-properties-common

[root@proxy ~]# apt-get update

[root@proxy ~]# apt-get install python-certbot-nginx

6. Generate NGINX Letsencrypt certificates

[root@proxy ~]# certbot --nginx

Saving debug log to /var/log/letsencrypt/letsencrypt.log

Plugins selected: Authenticator nginx, Installer nginx

Which names would you like to activate HTTPS for?

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

1: your.domain.com

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Select the appropriate numbers separated by commas and/or spaces, or leave input

blank to select all options shown (Enter 'c' to cancel): 1

Obtaining a new certificate

Performing the following challenges:

http-01 challenge for your.domain.com

Waiting for verification…

Cleaning up challenges

Deploying Certificate to VirtualHost /etc/nginx/sites-enabled/reverse-proxy.conf

Please choose whether or not to redirect HTTP traffic to HTTPS, removing HTTP access.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

1: No redirect - Make no further changes to the webserver configuration.

2: Redirect - Make all requests redirect to secure HTTPS access. Choose this for

new sites, or if you're confident your site works on HTTPS. You can undo this

change by editing your web server's configuration.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Select the appropriate number [1-2] then [enter] (press 'c' to cancel): 2

Redirecting all traffic on port 80 to ssl in /etc/nginx/sites-enabled/reverse-proxy.conf

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Congratulations! You have successfully enabled https://your.domain.com

You should test your configuration at:

https://www.ssllabs.com/ssltest/analyze.html?d=your.domain.com

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
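Let's Encrypt certificates are short lived, so it is worth confirming that renewal will work before the first expiry. The sketch below wraps certbot's dry-run mode, which exercises the renewal without replacing the installed certificates; the function name test_cert_renewal is made up for this example.

```shell
#!/bin/sh
# Simulate renewal of all certbot managed certificates without saving
# any new certificate to disk.
test_cert_renewal() {
    if ! command -v certbot >/dev/null 2>&1; then
        echo "certbot not found in PATH" >&2
        return 127
    fi
    certbot renew --dry-run
}

# Usage (as root on the proxy host):
#   test_cert_renewal
```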

7. Set NGINX Reverse Proxy to auto-start on Linux server boot

Most modern Linux distros use systemctl for this. On legacy machines, depending on the Linux distribution, use the adequate runlevel symlink under /etc/rc3.d/ on Debian based distros, or the RedHat chkconfig command on Fedora / CentOS / RHEL and other RPM based ones.

[root@proxy ~]# systemctl start nginx

[root@proxy ~]# systemctl enable nginx
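To confirm that both commands took effect, you can query systemd for the unit state. This is only a small sketch for systemd hosts; the function name nginx_service_state is an assumption of the example.

```shell
#!/bin/sh
# Show whether the nginx unit starts at boot and whether it runs right now.
nginx_service_state() {
    printf 'enabled: %s\n' "$(systemctl is-enabled nginx 2>/dev/null)"
    printf 'active:  %s\n' "$(systemctl is-active nginx 2>/dev/null)"
}

# Usage:
#   nginx_service_state
```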

8. Fixing weird connection permission denied errors

If you get weird permission denied errors right after you have configured proxy_pass on Nginx and you're wondering what is causing them, you should know that on CentOS 7 and RHEL you'll get these errors by default due to the automatically enabled SELinux security hardening.

If this is the case, after you set up Nginx + HTTPD or whatever application server, you will get errors in the nginx error log (for example /var/log/nginx/error.log) like:

2020/12/14 07:46:01 [crit] 7626#0: *1 connect() to 127.0.0.1:8080 failed (13: Permission denied) while connecting to upstream, client: 127.0.0.1, server: , request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:8080/", host: "localhost"

2020/12/14 07:46:01 [crit] 7626#0: *1 connect() to 127.0.0.1:8080 failed (13: Permission denied) while connecting to upstream, client: 127.0.0.1, server: , request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:8080/", host: "localhost"

2020/12/14 07:46:01 [crit] 7626#0: *1 connect() to 127.0.0.1:8080 failed (13: Permission denied) while connecting to upstream, client: 127.0.0.1, server: , request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:8080/", host: "localhost"

2020/12/14 07:46:02 [crit] 7626#0: *1 connect() to 127.0.0.1:8080 failed (13: Permission denied) while connecting to upstream, client: 127.0.0.1, server: , request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:8080/", host: "localhost"

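The solution to the weird proxy_pass permission denied errors is not to turn off SELinux completely, but to tell it to allow the webserver to make network connections (the -P flag makes the setting persistent across reboots):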

[root@proxy ~]# setsebool -P httpd_can_network_connect 1
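To check what SELinux is currently doing before and after the change, the standard getenforce and getsebool tools can be used. The wrapper name selinux_proxy_state is made up for this sketch, and it assumes an SELinux enabled RPM based host.

```shell
#!/bin/sh
# Print the SELinux mode and the state of the boolean that controls
# outgoing network connections from the webserver domain.
selinux_proxy_state() {
    getenforce
    getsebool httpd_can_network_connect
}

# Usage (on CentOS / RHEL):
#   selinux_proxy_state
```

After the setsebool command above, the boolean should be reported as on.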

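If some denials still remain, generate and load custom SELinux policy modules for nginx and httpd from the denials logged in the audit log: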

[root@proxy ~]# cat /var/log/audit/audit.log | grep nginx | grep denied | audit2allow -M mynginx

[root@proxy ~]# semodule -i mynginx.pp

[root@proxy ~]# cat /var/log/audit/audit.log | grep httpd | grep denied | audit2allow -M myhttpd

[root@proxy ~]# semodule -i myhttpd.pp

Then, to make nginx and httpd (or whatever other app you run) aware of the new settings, restart both:

[root@proxy ~]# systemctl restart nginx

[root@proxy ~]# systemctl restart httpd
