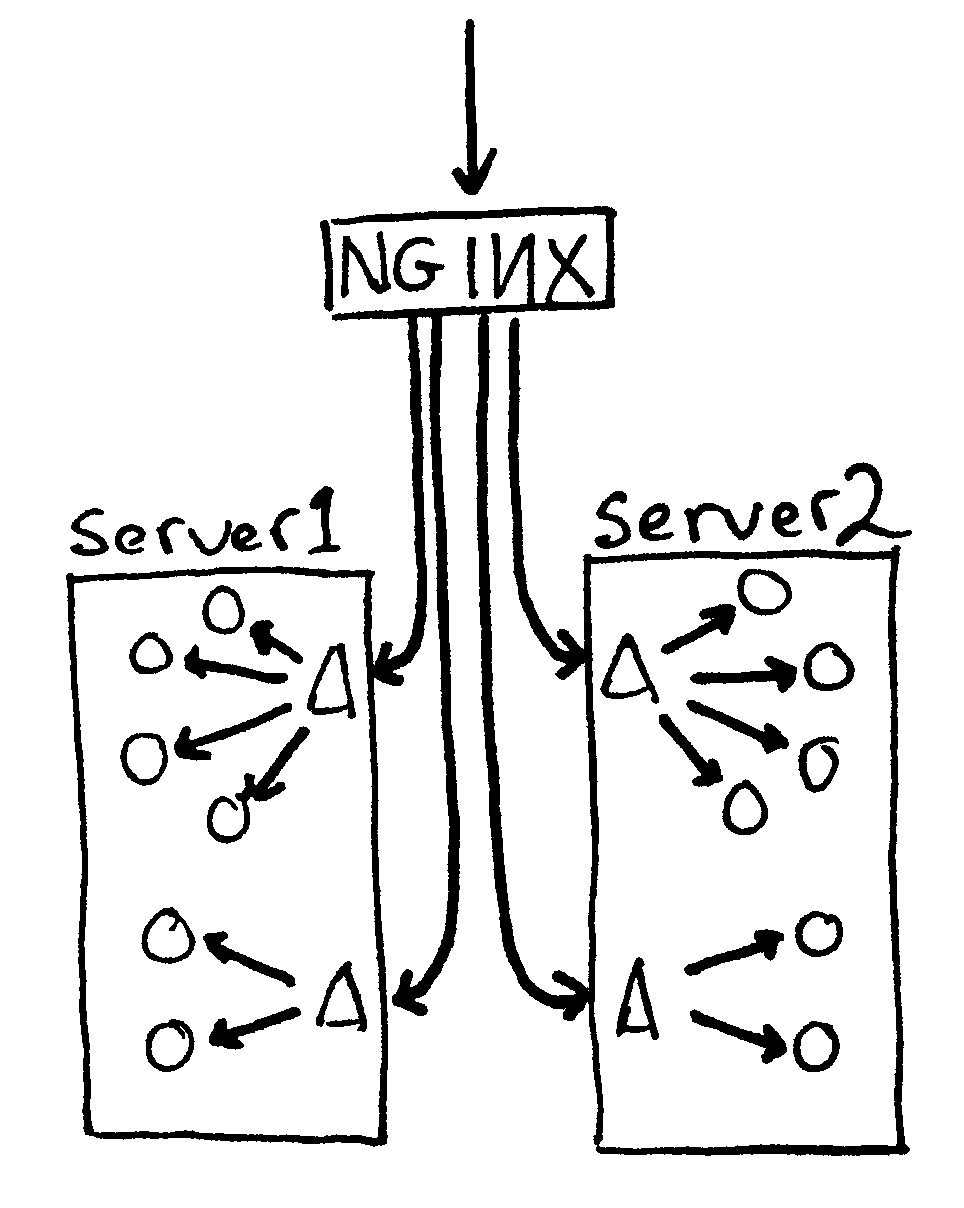

In a previous article I explained how load balancing is done with Apache. Load balancing is the distribution of incoming web traffic across several web servers sitting behind the load balancer. Anyone aiming for higher web server availability, application redundancy and fault tolerance should seriously consider using it. The most common load balancing method is Round Robin. Although Apache can be used as a load balancer, it was not designed with load balancing in mind, so using it for that purpose is probably not the most efficient choice, and Apache is not usually considered a standard load balancing solution. As I have lately been deepening my interest in balancers, I decided to see how to configure load balancing with the Nginx web server. Nginx is known for its speed, and nowadays many hosting providers prefer it over Apache. Below I explain how load balancing can be configured with Nginx. For the Nginx installation I use Debian GNU/Linux.

1. Installing Nginx webserver

loadbalancer:~# apt-get install --yes nginx

....

Once it is installed, we have to configure Nginx to do Round Robin load balancing. Nginx has a module that handles this.

2. Configuring Nginx for load balancing

Next, it is necessary to list all hosts between which load balancing will be done, using the Nginx upstream load balancing module.

a) Configuring Nginx to handle traffic equally between hosts

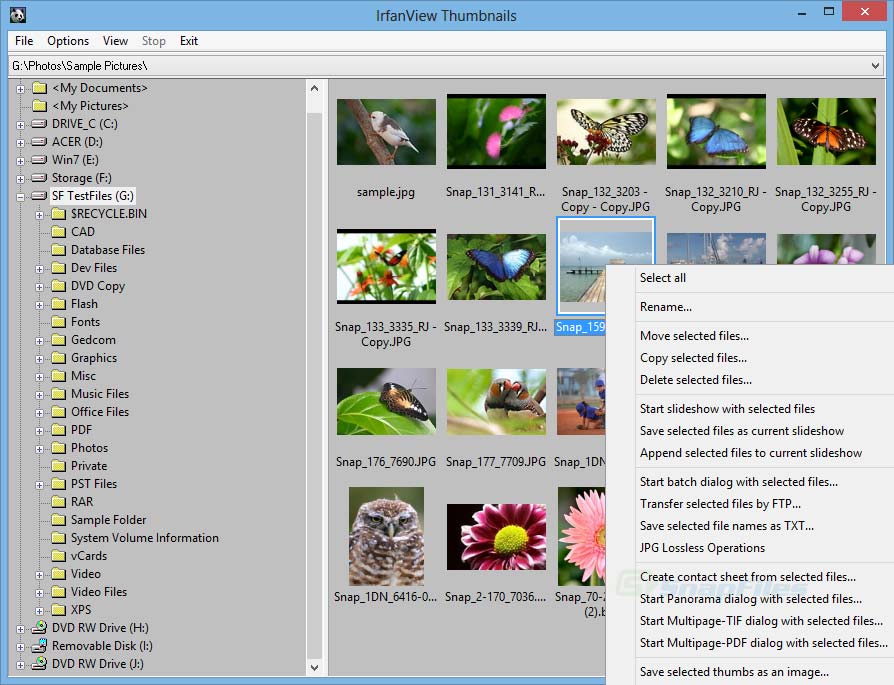

loadbalancer:~# vim /etc/nginx/sites-available/default

upstream backend {

server backend1.loadbalanced-server.com;

server backend2.loadbalanced-server.com;

server backend3.loadbalanced-server.com;

server backend4.loadbalanced-server.com;

}

Using the above configuration, incoming HTTP traffic will be distributed equally between the backend1 … backend4 servers. This assumes the four balanced web servers run on similar or identical hardware and serve identical file content. If that is not the case and the four hosts differ in CPU power and memory, you can give each machine a weighting factor with the weight parameter.
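To picture the even split, here is a minimal Python sketch of plain round-robin selection. It only illustrates the idea and is not Nginx's own code: the round_robin helper is a made-up name, and the hostnames are simply the example ones from the upstream block.

```python
from collections import Counter
from itertools import cycle

# Example hostnames copied from the upstream block (illustrative only).
BACKENDS = [
    "backend1.loadbalanced-server.com",
    "backend2.loadbalanced-server.com",
    "backend3.loadbalanced-server.com",
    "backend4.loadbalanced-server.com",
]


def round_robin(backends, n_requests):
    """Return the backend chosen for each request, rotating through the list."""
    rotation = cycle(backends)
    return [next(rotation) for _ in range(n_requests)]


# Hand out 8 requests and count how many each backend received.
picks = round_robin(BACKENDS, 8)
counts = Counter(picks)
for name in BACKENDS:
    print(name, counts[name])
```

With 8 requests and 4 backends, every backend ends up with the same number of requests, which is the behaviour you get when no weights are set.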

b) Using weight factor to set different traffic distribution

To specify the proportion of traffic each of the four hosts above will get, use:

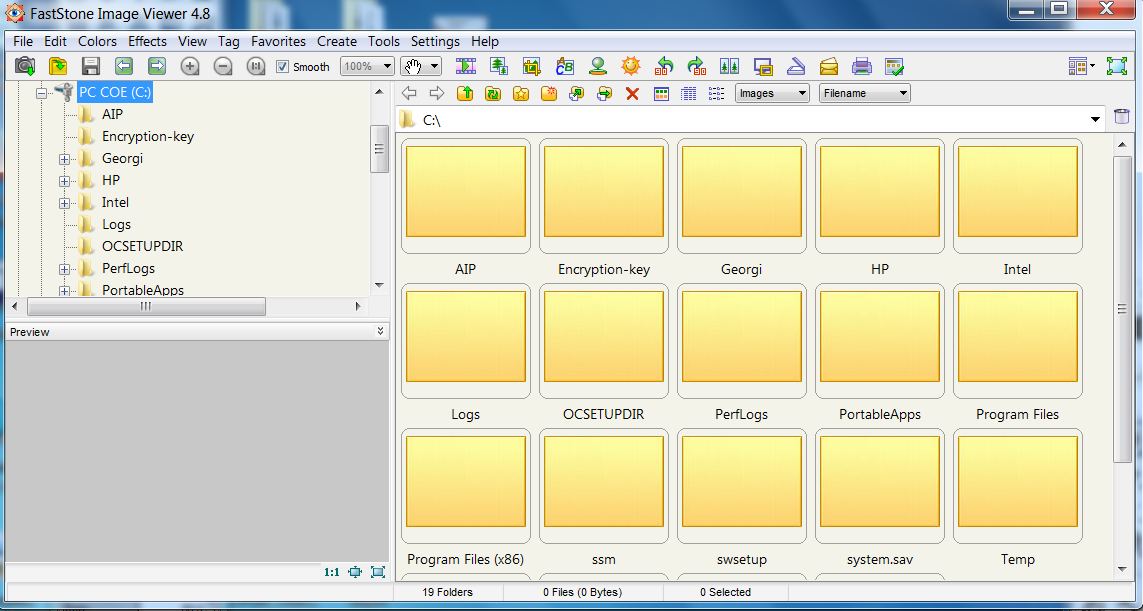

upstream backend {

server backend1.loadbalanced-server.com weight=1;

server backend2.loadbalanced-server.com weight=2;

server backend3.loadbalanced-server.com weight=3;

server backend4.loadbalanced-server.com weight=4;

}

weight=1 is the default value. The other weights set in the example (weight=2, weight=3, weight=4) mean the following:

backend2.loadbalanced-server.com weight=2: the web server will receive 2 times as much HTTP traffic as backend1

backend3.loadbalanced-server.com weight=3: the web server will receive 3 times the traffic of backend1

backend4.loadbalanced-server.com weight=4: the web server will receive 4 times the traffic of backend1
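To see how such weights turn into a traffic split, here is a small Python sketch of a smooth weighted round-robin scheme. Treat it as an illustration under assumptions: it is a simplified model of the technique, not Nginx's actual implementation, and the function name and the short backend names are made up for the example.

```python
from collections import Counter


def smooth_weighted_round_robin(weights, n_requests):
    """Pick a backend for each request so shares follow the weights.

    weights: dict mapping backend name -> positive integer weight.
    """
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    picks = []
    for _ in range(n_requests):
        # Every backend earns credit equal to its weight on each request.
        for name, weight in weights.items():
            current[name] += weight
        # The backend holding the most credit serves this request...
        chosen = max(current, key=current.get)
        # ...and pays back the sum of all weights, so others get their turn.
        current[chosen] -= total
        picks.append(chosen)
    return picks


# Same weights as in the upstream example (names shortened for readability).
WEIGHTS = {"backend1": 1, "backend2": 2, "backend3": 3, "backend4": 4}
picks = smooth_weighted_round_robin(WEIGHTS, 10)
counts = Counter(picks)
for name in WEIGHTS:
    print(name, counts[name])
```

Over 10 requests (the sum of the weights) backend1 serves 1 request, backend2 serves 2, backend3 serves 3 and backend4 serves 4, and the heavier servers are interleaved with the lighter ones rather than being hit in one long burst.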

In some cases it is better to supply IP addresses instead of the backend1.loadbalanced-server.com … backend4 hostnames; this prevents any possible issues with DNS.

c) Setting checks for balanced webserver to be considered unresponsive

Two very useful settings when configuring a group of web servers handling HTTP are:

- max_fails

and

- fail_timeout

These are good to add if there is a chance that one or more of the balanced servers dies or hangs for some unknown reason. If that happens without them tuned, part of the HTTP traffic will still be sent to the dead server and some clients will occasionally get no response. The settings are especially useful if you have a web server which tends to die periodically for unknown reasons.

To avoid such situations use a configuration like this:

upstream backend {

server backend1.loadbalanced-server.com max_fails=5 fail_timeout=15s;

server backend2.loadbalanced-server.com weight=2;

server backend3.loadbalanced-server.com weight=3;

server backend4.loadbalanced-server.com weight=4;

}

max_fails=5 together with fail_timeout=15s instructs the Nginx balancer to count failed attempts to reach backend1.loadbalanced-server.com. If 5 attempts fail within 15 seconds, Nginx considers backend1.loadbalanced-server.com unresponsive (down) and stops sending traffic to it for the next 15 seconds, after which it tries the server again.
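The bookkeeping behind these two settings can be sketched in a few lines of Python. This is a toy model under assumptions, meant only to show the idea of "N failures inside a time window means skip the server for a while"; the BackendHealth class and its methods are hypothetical names, and the real Nginx logic differs in its details.

```python
class BackendHealth:
    """Toy model of max_fails / fail_timeout tracking for a single backend."""

    def __init__(self, max_fails=5, fail_timeout=15.0):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failures = []      # timestamps (seconds) of recent failed attempts
        self.down_until = 0.0   # the backend is skipped until this time

    def record_failure(self, now):
        # Forget failures older than the fail_timeout window.
        self.failures = [t for t in self.failures if now - t < self.fail_timeout]
        self.failures.append(now)
        if len(self.failures) >= self.max_fails:
            # Too many failures in the window: skip the backend for fail_timeout.
            self.down_until = now + self.fail_timeout
            self.failures = []

    def is_available(self, now):
        return now >= self.down_until


# Same values as in the upstream example: max_fails=5 fail_timeout=15s.
health = BackendHealth(max_fails=5, fail_timeout=15.0)
for second in range(4):
    health.record_failure(now=float(second))
still_up = health.is_available(now=4.0)       # only 4 failures so far

health.record_failure(now=4.0)                # fifth failure within 15 seconds
marked_down = not health.is_available(now=5.0)
recovered = health.is_available(now=19.0)     # 15 seconds after the fifth failure

print(still_up, marked_down, recovered)
```

Passing the current time in as an argument keeps the sketch easy to reason about: four failures leave the backend in rotation, the fifth one inside the window takes it out, and once fail_timeout has passed it becomes eligible again.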

Finally, we need to make load balancing active via proxy_pass by adding the following to the config:

server {

location / {

proxy_pass http://backend;

}

}

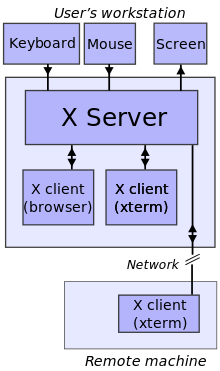

Well, that's all. Though load balancing looks complex and scary, it turns out to be a piece of cake. Of course there are plenty of things to learn in the field, especially if you have to manage a large farm of Apache web servers behind the load balancer. It is also possible to configure a second Nginx as a load balancer to guarantee higher redundancy. Two Nginx load balancers can be configured to work in active load balancing mode or passive load balancing mode. Just for the curious, the difference between active load balancing and passive load balancing is:

- Active load balancing: the two load balancers handle incoming requests together.

- Passive load balancing: only one load balancer handles HTTP traffic, and if it dies due to overload or hangs, the second configured Nginx load balancer starts serving requests.

There is plenty more to be said about load balancing, but I guess this article will be a good starting point for people who want to start playing with Nginx load balancing. Enjoy!