It’s the feast of the glorious Prophet Elijah in the Orthodox Church. Every year on the 20th of July we celebrate this feast, when we briefly commemorate the glorious life with which the merciful God blessed the prophet.

Elijah is considered the greatest Old Testament prophet before the coming of our Lord and Saviour Jesus Christ.

St. Prophet Elias is one of only two people who did not die but were taken up to heaven. The first, who by God’s mercy and because of his great righteousness was taken to heaven without facing physical death, was Enoch.

A short version of Saint Elijah’s whole life is available for reading here.

Elijah is especially famous for his God-inspired “contest” against the prophets of Baal, in which he showed the idolaters who the true, living God is.

Here are a few interesting extracts from the Saint’s Life:

During these two years a famine prevailed in the land. At the close of this period of retirement and of preparation for his work, Elijah met Obadiah, one of Ahab’s officers, whom he had sent out to seek for pasturage for the cattle, and bade him go and tell his master that Elijah was there. The king came and met Elijah, and reproached him as the “troubler of Israel.” It was then proposed that sacrifices should be publicly offered, for the purpose of determining whether Baal or the Israelite God was the true God. This was done on Mount Carmel; the result was that a miracle took place convincing those watching that Baal was false and that the Israelite God was real. The prophets of Baal were then put to death by the order of Elijah.

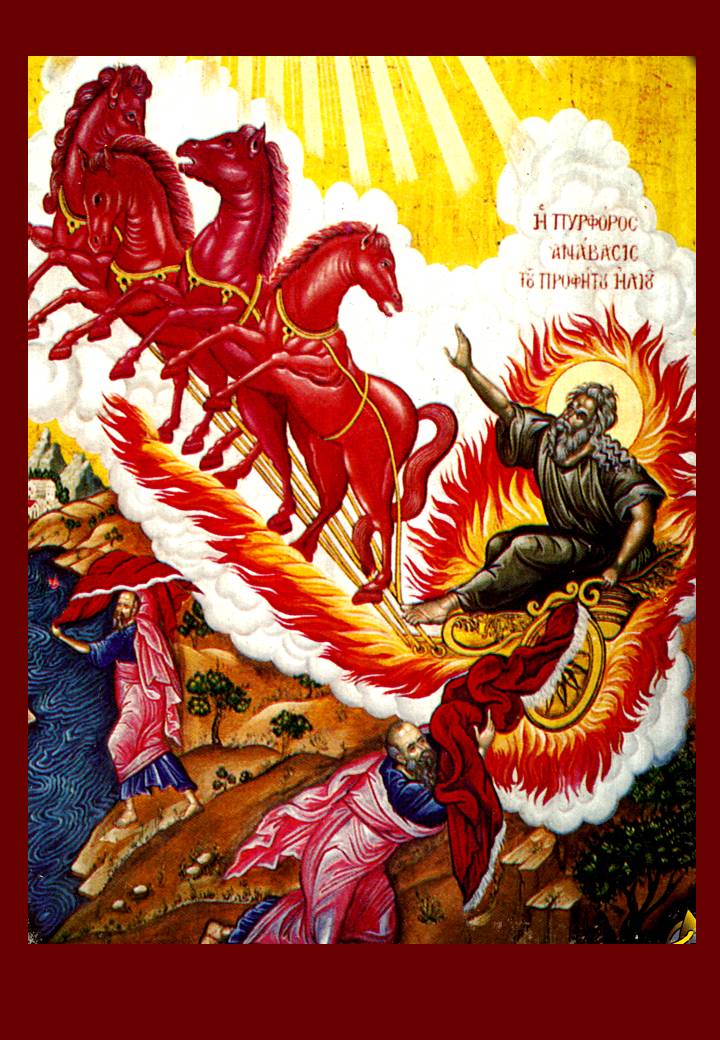

Another very notable moment in Elijah’s life, and a marvelous manifestation of God, is his glorious ascent into heaven, taken up by God Almighty.

God taking Elijah to heaven in a whirlwind by a chariot and horses of fire.

Read the short revised version below:

The time now drew near when he was to be taken up into heaven (2 Kings 2:1-12). He went down to Gilgal, where there was a school of prophets, and where his successor Elisha, whom he had anointed some years before, resided. Elisha was distraught by the thought of his master’s leaving him, and refused to be parted from him. The two went on and came to Bethel and Jericho, and crossed the Jordan, the waters of which were “divided hither and thither” when smitten with Elijah’s mantle. Upon arriving at the borders of Gilead, which Elijah had left many years before, it “came to pass as they still went on and talked” that they were suddenly separated by a chariot and horses of fire; and “Elijah went up by a whirlwind into heaven,” Elisha receiving his mantle, which fell from Elijah as he ascended.

Elijah’s chosen successor was the prophet Elisha; Elijah designated Elisha as such by leaving his mantle with him (2 Kings 2:13-15), so that his wish for “a double portion” of the older prophet’s spirit (2:9), an allusion to the preference shown the first-born son in the division of the father’s estate (Deuteronomy 21:17), had been fulfilled.